Note

This Python sample may be slow depending on the event rate of the scene and the configuration of the algorithm. We provide it to allow quick prototyping. For better performance, look at the corresponding C++ sample.

Dense Optical Flow Sample using Python

The Python bindings of the Metavision Computer Vision API can be used to compute the dense optical flow of objects moving in front of the camera. The dense optical flow is computed for every event, unlike in the Sparse Optical Flow sample, where flow is estimated on clusters of events. For a summary of the available optical flow algorithms, see the “Available Optical Flow Algorithms” section below.

The sample metavision_dense_optical_flow.py shows how to use the Python bindings of the Metavision CV SDK to implement a pipeline for computing the dense optical flow.

The source code of this sample can be found in <install-prefix>/share/metavision/sdk/cv/python_samples/metavision_dense_optical_flow when installing Metavision SDK from installer or packages. For other deployment methods, check the page Path of Samples.

Expected Output

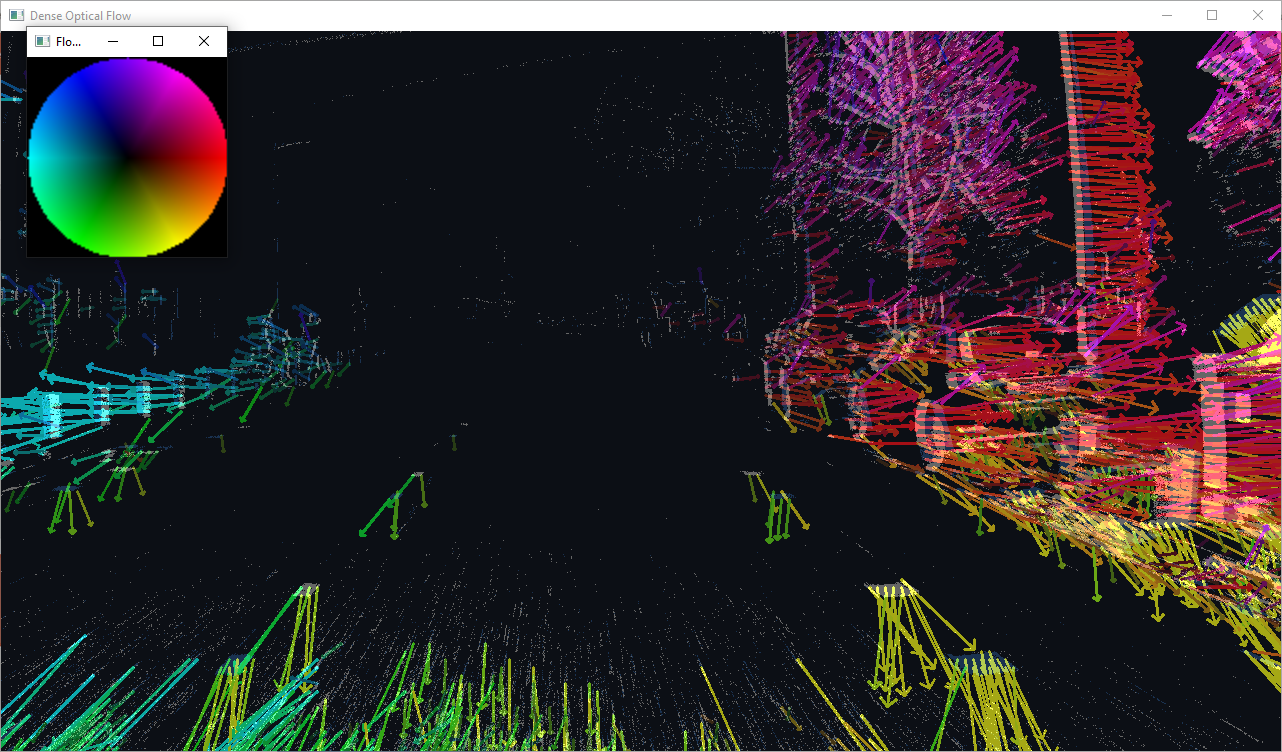

The sample visualizes events and the output optical flow using colors indicating the edge normal direction and the magnitude of motion:

The sample can also generate a video with the output flow.
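To give an idea of how a flow field can be turned into such a color image, here is a minimal sketch using NumPy only. It assumes a common HSV convention (hue encodes direction, brightness encodes speed); this is not necessarily the exact color mapping used by the SDK, and the function name `flow_to_rgb` and its parameters are hypothetical, not part of the Metavision API.

```python
import numpy as np


def flow_to_rgb(vx, vy, max_magnitude=None):
    """Color-code a dense flow field: hue = direction, brightness = speed.

    Hypothetical helper for illustration only; it is not an SDK function.
    vx, vy: 2D arrays holding the flow components for each pixel.
    """
    vx = np.asarray(vx, dtype=np.float64)
    vy = np.asarray(vy, dtype=np.float64)

    magnitude = np.hypot(vx, vy)
    angle = np.arctan2(vy, vx)                 # direction in [-pi, pi]
    hue = (angle + np.pi) / (2.0 * np.pi)      # normalized to [0, 1]

    # Normalize the speed so that the fastest pixel is fully bright.
    if max_magnitude is None:
        max_magnitude = magnitude.max()
    if max_magnitude > 0:
        value = np.clip(magnitude / max_magnitude, 0.0, 1.0)
    else:
        value = np.zeros_like(magnitude)

    # Vectorized HSV -> RGB conversion with saturation fixed to 1.
    h6 = (hue * 6.0) % 6.0

    def channel(n):
        k = (n + h6) % 6.0
        return value * (1.0 - np.clip(np.minimum(k, 4.0 - k), 0.0, 1.0))

    rgb = np.stack([channel(5), channel(3), channel(1)], axis=-1)
    return np.round(rgb * 255.0).astype(np.uint8)


# Tiny synthetic flow field: one pixel moving along +x, one along +y.
vx = np.array([[0.0, 1.0], [0.0, 0.0]])
vy = np.array([[0.0, 0.0], [0.5, 0.0]])
image = flow_to_rgb(vx, vy)
```

With this convention, pixels without motion stay black and pixels moving in different directions get different hues, so the direction and speed of motion can be read at a glance. Passing a fixed `max_magnitude` keeps the color scale stable from frame to frame.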

How to start

To start the sample based on recorded data, provide the full path to a RAW or HDF5 event file (here, we use a file from our Sample Recordings):

python metavision_dense_optical_flow.py -i driving_sample.raw --flow-type TripletMatching

To check for additional options:

python metavision_dense_optical_flow.py -h

Available Optical Flow Algorithms

This sample lets you compare several dense optical flow algorithms: Plane Fitting flow, Triplet Matching flow and Time Gradient flow. The SDK API also offers alternatives, namely Sparse Optical Flow and Machine Learning Flow inference, all described here.