Note

This C++ sample has a corresponding Python sample.

Events Integration using C++

The sample metavision_events_integration.cpp shows how to use the Metavision SDK Core API to generate a grayscale image using a simple integration algorithm and display this image in a window.

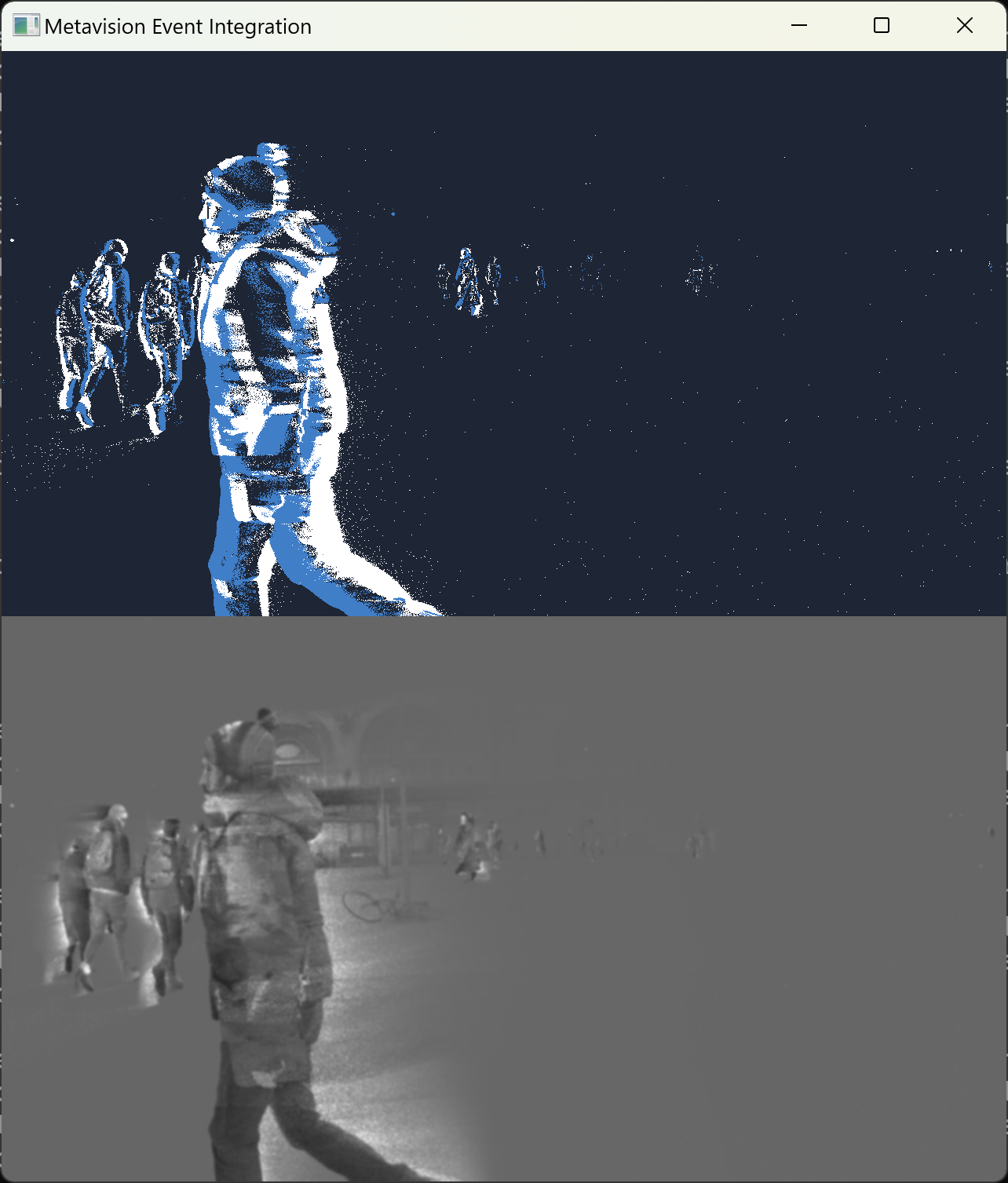

The sample displays the following views, computed over the same time period:

The event frame, showing the events received during the period. The event frame is displayed at the top using the Dark color map.

The events integration frame, showing the light intensity integrated from the events received during the period. The events integration frame is displayed at the bottom in grayscale, if selected.

The contrast map, showing the total contrast of events received during the period. The contrast map is displayed at the bottom in grayscale, if selected.

The user can switch the bottom view between the events integration frame and the contrast map by pressing the ‘t’ key.

One issue when integrating events is that the boundary conditions, in this case the light intensity values at the start of the integration, are unknown. This typically leaves a trail behind moving objects, which can be observed with this sample by setting a very large decay time, for instance --decay-time 100000000. A conventional way to circumvent this issue is to apply a decay so that the integrated signal converges back to neutral gray after some time. One difficulty, however, is that the decay time typically needs to be tuned to the dynamics of the observed objects.
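The decay idea described above can be illustrated with a minimal leaky integrator. This is a sketch under stated assumptions, not the algorithm implemented in the sample or in the SDK: the `Event` struct, the `LeakyIntegrator` class, the exponential decay law and the `contrast_step` parameter are all hypothetical names and choices introduced for illustration. Each pixel holds an intensity in [0, 1] that relaxes toward neutral gray (0.5) with time constant `decay_time_us` and is shifted up or down by each event.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical event type for this sketch (not the SDK's event class).
struct Event {
    int x;
    int y;
    int polarity;   // 1: intensity increase, 0: intensity decrease
    std::int64_t t; // timestamp in microseconds
};

constexpr double kNeutralGray = 0.5; // intensities are kept in [0, 1]

// Assumed model: per-pixel exponential decay toward neutral gray.
class LeakyIntegrator {
public:
    LeakyIntegrator(int width, int height, double decay_time_us, double contrast_step) :
        width_(width),
        height_(height),
        decay_time_us_(decay_time_us),
        contrast_step_(contrast_step),
        value_(static_cast<std::size_t>(width) * height, kNeutralGray),
        last_t_(static_cast<std::size_t>(width) * height, 0) {
        assert(width > 0 && height > 0 && decay_time_us > 0.0);
    }

    // Decay the pixel up to the event time, then apply the contrast step.
    void add_event(const Event &ev) {
        const std::size_t i = index(ev.x, ev.y);
        value_[i]  = decayed(i, ev.t);
        last_t_[i] = ev.t;
        const double step = ev.polarity ? contrast_step_ : -contrast_step_;
        value_[i] = std::min(1.0, std::max(0.0, value_[i] + step));
    }

    // Intensity estimate at time t, without modifying the state.
    double value_at(int x, int y, std::int64_t t) const {
        return decayed(index(x, y), t);
    }

private:
    std::size_t index(int x, int y) const {
        assert(x >= 0 && x < width_ && y >= 0 && y < height_);
        return static_cast<std::size_t>(y) * width_ + x;
    }

    // Value of pixel i after relaxing toward neutral gray until time t.
    double decayed(std::size_t i, std::int64_t t) const {
        assert(t >= last_t_[i]); // events are assumed to be time-ordered
        const double k = std::exp(-static_cast<double>(t - last_t_[i]) / decay_time_us_);
        return kNeutralGray + (value_[i] - kNeutralGray) * k;
    }

    int width_;
    int height_;
    double decay_time_us_;
    double contrast_step_;
    std::vector<double> value_;
    std::vector<std::int64_t> last_t_;
};
```

With a very large `decay_time_us`, the exponential factor stays close to 1 and a pixel keeps whatever value the last events left there, which corresponds to the trail effect. With a short one, pixels return to gray quickly, and slowly changing parts of the scene fade out.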

Another challenge when integrating events is noise, typically caused by the sensor's background noise and other perception non-idealities. Several approaches can be used to reduce it, for instance applying a Gaussian blur filter. In this sample, this can be done using the command-line argument --blur-radius. For static cameras, one can also use a “diffusion weight” to refine the estimation at a given pixel based on the intensity estimated at adjacent pixels. In this sample, this can be done using the command-line argument --diffusion-weight.
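To make the diffusion idea concrete, here is a minimal sketch of one possible diffusion pass. It assumes that "diffusion" means blending each pixel with the mean of its 4-connected neighbors. The function name `diffuse_once`, the 4-neighborhood and the blending formula are assumptions made for illustration, and the sample's actual handling of --diffusion-weight may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One diffusion pass over a row-major image (hypothetical helper):
// out = (1 - weight) * pixel + weight * mean(4-connected neighbors).
std::vector<double> diffuse_once(const std::vector<double> &img, int width, int height, double weight) {
    assert(width > 0 && height > 0);
    assert(weight >= 0.0 && weight <= 1.0);
    assert(img.size() == static_cast<std::size_t>(width) * height);

    const int dx[4] = {-1, 1, 0, 0};
    const int dy[4] = {0, 0, -1, 1};
    std::vector<double> out(img.size());

    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            double sum = 0.0;
            int count  = 0;
            // Accumulate the neighbors that lie inside the image.
            for (int k = 0; k < 4; ++k) {
                const int nx = x + dx[k];
                const int ny = y + dy[k];
                if (nx >= 0 && nx < width && ny >= 0 && ny < height) {
                    sum += img[static_cast<std::size_t>(ny) * width + nx];
                    ++count;
                }
            }
            const std::size_t i = static_cast<std::size_t>(y) * width + x;
            // A 1x1 image has no neighbors: keep the pixel unchanged.
            out[i] = (count > 0) ? (1.0 - weight) * img[i] + weight * (sum / count) : img[i];
        }
    }
    return out;
}
```

A weight of 0 leaves the image unchanged, while larger weights pull isolated outliers toward their surroundings at the cost of some sharpness. Because the estimate at a pixel borrows from fixed neighbors over time, this kind of smoothing is better suited to a static camera, as noted above.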

The source code of this sample can be found in <install-prefix>/share/metavision/sdk/core/cpp_samples/metavision_events_integration when installing Metavision SDK from installer or packages. For other deployment methods, check the page Path of Samples.

The integration algorithm used in this sample is quite simple and therefore gives limited results. More advanced approaches, in particular those based on machine learning, can improve the quality of the integrated image, as implemented in the event to video inference sample.

Expected Output

The sample visualizes CD events from an event-based device or a recording (RAW or HDF5 event file) after integration.

How to start

First, compile the sample as described in this tutorial.

To start the sample based on the live stream from your camera, run:

Linux

./metavision_events_integration

Windows

metavision_events_integration.exe

To start the sample based on recorded data, provide the full path to an event file (here, we use the file pedestrians.hdf5 from our Sample Recordings):

Linux

./metavision_events_integration -i pedestrians.hdf5

Windows

metavision_events_integration.exe -i pedestrians.hdf5