FAQ

If you don’t find the answer to your question in this FAQ and you are a Prophesee customer, you can ask it:

to the whole Prophesee event-based community in our Community Forum

to Prophesee Support Team using our support request ticketing tool

Note

While the forum is an excellent place to seek help and share insights, priority support is provided to Premium Support members through support tickets. Additionally, to stay updated with new posts and join the conversation in the forum, you can click the ‘follow’ button in the right menu to follow the community channel.

Table of Contents


How can I build a custom USB camera using Prophesee sensors?

How to Interface Prophesee Sensors with an MCU, MPU, or FPGA/MPSoC?

What kind of extension USB cable should I use for my camera?

To avoid flicker events, which non-flickering light source should I use?

What is the Quantum Efficiency / Spectral Response of Prophesee Sensors?

What is the maximum illuminance a Prophesee event-based sensor can support without being damaged?

What is the SNR (Signal to Noise ratio) of my event-based sensor?


Requirements and Installation

Which operating systems are supported by Metavision SDK?

Metavision SDK supports Linux Ubuntu 22.04 / 24.04 and 64-bit Windows 10 and 11. For other operating systems, you can compile OpenEB, which contains the source code of our Open modules. If you want to compile the whole SDK (including our Advanced modules with event-based algorithms) on another platform, you need our SDK Pro offer. Contact us for a quote.

Can I use Metavision SDK Advanced modules on platforms other than the supported ones?

To do so, you need to acquire the source code of the Advanced modules with our SDK Pro offer. Contact us for a quote.

How to interface Prophesee USB EVKs with an ARM or other non-AMD64 (x86-64) platform (e.g. Nvidia Jetson, Raspberry Pi, etc.)?

You can compile the source code of the SDK on the platform of your choice by following the Installation of SDK on Linux Ubuntu from Sources guide. Note that some adjustments to the install guide or to the code itself may be required (especially for non-Debian Linux distributions).

Since the plugin source code of our EVKs is open source and included in OpenEB, you can interface our EVKs with other platforms, and the Open modules let you stream, decode and display events, and more. To do so, just compile OpenEB on your platform and connect your EVK.

Note that certain older EVK versions are no longer supported in the latest OpenEB release. For instance, EVK1 has been deprecated since release 4.0. If you’re using an EVK1, you need to use SDK 3.1 instead. To download this version, access our SDK 3.1 page of the Download Center.

Also, if you have a Prophesee product with Gen4.0 sensor, you may need additional support. Please contact us in that case.

Note

If you are using a partner camera, you may need the corresponding plugin source code which should be compiled on your platform.

In the case of SilkyEVCam camera for example, you can request the plugin source code via Century Arks website.

How can I retrieve information on installed Prophesee software?

You can retrieve the information on the installed software and their versions with these tools:

metavision_platform_info (it also provides you information about the connected cameras)

Can I use a Virtual Machine (VM) to work with my camera?

Prophesee cameras require USB 3.0. Because virtual machines have limited USB 3.0 support, the display and USB data transfer will not perform as expected, so we do not recommend using virtual machines.

Software Packaging

What is the Metavision SDK Pro pricing model?

SDK Pro is included at no additional cost with every Prophesee USB EVK purchased after October 7th, 2024. It is also available for standalone purchase. Contact us for a quote.

What is the difference between SDK Pro and OpenEB?

OpenEB comes with the source code of the fundamental modules of Metavision SDK, whereas Metavision SDK Pro comes with the full source code of Metavision SDK, including advanced event-based algorithms and Metavision Studio. In addition, SDK Pro grants advanced access to the Prophesee Knowledge Center and 2 hours of premium support.

How can I get access to a free version of the SDK?

Starting with version 5.0, Metavision SDK is no longer free. It is included with every Prophesee USB EVK purchase and is also available for standalone purchase. If you want free software, you can use OpenEB, though it is limited to the Open modules. Another option is to use Metavision SDK 4.6, which is still available and free to use:

If you are a Prophesee customer, retrieve it in the Knowledge Center Download section.

If you want to acquire a Prophesee camera or the SDK Pro, Contact us for a quote.

Live data and recorded files

What kind of data do we get from a Prophesee sensor?

Prophesee sensors produce Contrast Detection (CD) events. They represent a pixel's response to a change in illumination and come in two types:

CD ON events correspond to a positive change: from dark to light

CD OFF events correspond to a negative change: from light to dark
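To make the two polarities concrete, here is a minimal Python sketch that counts ON and OFF events in a batch. It assumes each event is an (x, y, p, t) tuple where p = 1 marks a CD ON event and p = 0 a CD OFF event, which we assume mirrors the polarity convention of Metavision CD events; the helper name count_polarities is hypothetical and not part of the SDK.

```python
from collections import Counter
from typing import Iterable, Tuple

# Assumed event layout: (x, y, p, t) with p = 1 for CD ON, p = 0 for CD OFF,
# and t the timestamp in microseconds.
Event = Tuple[int, int, int, int]


def count_polarities(events: Iterable[Event]) -> dict:
    """Return the number of CD ON and CD OFF events in a batch (hypothetical helper)."""
    counts = Counter(p for _x, _y, p, _t in events)
    # Counter returns 0 for a polarity that never occurred.
    return {"on": counts[1], "off": counts[0]}


if __name__ == "__main__":
    batch = [(10, 20, 1, 100), (10, 20, 0, 250), (11, 20, 1, 300)]
    print(count_polarities(batch))  # {'on': 2, 'off': 1}
```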

Those events are then streamed in two distinct proprietary formats:

Explore the following resources to dive deeper into this topic:

Can I record data from my event-based camera? Which format is used for data recording?

You can record data with Metavision Studio. Data is recorded in RAW format, but Metavision Studio also allows you to export your recording in AVI format.

Alternatively, you can use Metavision Viewer to record RAW files. Since you have access to the source code of this tool, you can see Metavision SDK in use and even enhance it to fit your needs.

In addition, you can convert files to the following formats:

CSV format using metavision_file_to_csv

What are the .tmp_index files created when I open a RAW file?

This index file stores metadata about where timestamps are located within the file. More information on those files can be found in our page about RAW index files.

How do I know what is the event encoding format of a file?

You can find the event encoding format of your file (EVT2, EVT21 or EVT3 for RAW files, ECF for HDF5 event files) using one of these methods:

Use the metavision_file_info application.

If the file has the .raw extension, open the file in any editor and look for the key “evt” or “format” in the header.
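If you prefer to script the header check, the following sketch collects the header of a RAW file into a dictionary. It assumes the header is a run of ASCII lines starting with %, optionally closed by a % end line, and that the encoding is stored under the format key (e.g. EVT3;height=720;width=1280) or under the evt key in older files; both function names are hypothetical, and metavision_file_info remains the reference tool.

```python
import sys


def read_raw_header(path):
    """Return the '% key value' header lines of a RAW file as a dict.

    Assumption: the header is a run of ASCII lines starting with '%', optionally
    closed by a '% end' line, and followed by binary event data.
    """
    header = {}
    with open(path, "rb") as f:
        for line in iter(f.readline, b""):
            if not line.startswith(b"%"):
                break  # reached the binary payload without a '% end' marker
            text = line[1:].decode("ascii", errors="replace").strip()
            if text == "end":
                break
            key, _, value = text.partition(" ")
            if key:
                header[key] = value.strip()
    return header


def event_format(header):
    """Return the encoding name (e.g. 'EVT3') from the 'format' key or the older 'evt' key."""
    value = header.get("format") or header.get("evt")
    return value.split(";")[0] if value else None


if __name__ == "__main__":
    hdr = read_raw_header(sys.argv[1])
    print("format:", event_format(hdr))
```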

How can I check the duration and the event rate of my file?

You can use the metavision_file_info application:

metavision_file_info -i YOUR_FILE.raw
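metavision_file_info reports these values for you; a mean event rate is simply the event count divided by the time span. The sketch below assumes you already have the event timestamps in microseconds (for instance the t column of a CSV export), sorted in increasing order. The helper name is hypothetical, and the tool may measure the duration slightly differently (e.g. from the start of the recording rather than from the first event).

```python
def duration_and_rate(timestamps_us):
    """Return (duration in s, mean event rate in events/s) for sorted timestamps in µs."""
    if len(timestamps_us) < 2:
        raise ValueError("need at least two events to measure a duration")
    duration_s = (timestamps_us[-1] - timestamps_us[0]) / 1e6
    if duration_s == 0:
        raise ValueError("all events share the same timestamp")
    return duration_s, len(timestamps_us) / duration_s


if __name__ == "__main__":
    d, r = duration_and_rate([0, 250_000, 500_000, 1_000_000])
    print(f"duration: {d} s, mean rate: {r} ev/s")
```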

Why do event timestamps differ when reading a RAW file with Standalone decoders versus other SDK applications?

When RAW files are converted to CSV using our Standalone decoder samples, you may notice that the timestamps differ from those obtained when the same file is converted using the File to CSV Converter tool or other tools like metavision_file_info.

This discrepancy arises due to a process called “timestamp shifting” which is explained in detail on this page of our documentation. In summary:

Standalone decoders do not apply timestamp shifting.

Other SDK tools, such as the CSV converter and File Info, apply timestamp shifting.
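To compare the two outputs, you can check that they differ by a single constant offset. This sketch assumes both exports list the same events in the same order and that timestamp shifting subtracts one constant per file; timestamp_offset is a hypothetical helper, and loading the t column of each CSV is left to you.

```python
def timestamp_offset(unshifted_us, shifted_us):
    """Return the constant offset between unshifted and shifted timestamps of the same events.

    Raises ValueError if the sequences differ in length or the offset is not constant.
    """
    if not unshifted_us or len(unshifted_us) != len(shifted_us):
        raise ValueError("both sequences must be non-empty and list the same events")
    offset = unshifted_us[0] - shifted_us[0]
    for a, b in zip(unshifted_us, shifted_us):
        if a - b != offset:
            raise ValueError("offset is not constant: the files may not list the same events")
    return offset


if __name__ == "__main__":
    standalone = [1000, 1500, 2200]  # timestamps from a Standalone decoder export
    shifted = [0, 500, 1200]         # timestamps from metavision_file_to_csv
    print(timestamp_offset(standalone, shifted))
```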

How can I read IMU data with Metavision SDK?

Some Prophesee products are able to record IMU (Inertial Measurement Unit) data along with the sensor's native data stream. This IMU is not part of our sensors but is mounted on another board (Invensense MPU-9250) within some of our EVKs. IMU was supported in Metavision SDK up to version 2.0.0, but since this feature was not used by any of our partners' cameras, we removed it starting with version 2.1.0 of Metavision SDK.

If you want to use the IMU or if you have RAW files that contain IMU data that you want to decode, the easiest way is to use Metavision 2.0.0. Please check our Knowledge Center page on IMU data format.

If you want to read IMU data with the latest version of Metavision SDK, you will have to program your own event decoder. Some guidance on doing so is also given on the aforementioned Knowledge Center page.

Programming

How can I change the logging level at runtime?

Metavision uses its own logging mechanism with four levels: TRACE, INFO, WARNING, ERROR.

Messages at or above the configured level are output, and messages below it are ignored.

The default level (INFO) can be changed by setting the environment variable MV_LOG_LEVEL. This can help you troubleshoot problems.

For example, to get a more detailed log, TRACE level can be used:

Linux

export MV_LOG_LEVEL=TRACE

Windows

set MV_LOG_LEVEL=TRACE
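To raise verbosity for a single run without changing your shell environment, you can launch an application from a script with MV_LOG_LEVEL set only for that child process. This sketch assumes the level must be one of the four names listed above; run_with_log_level is a hypothetical helper, and both stdout and stderr are captured because the stream used for log output is not specified here.

```python
import os
import subprocess
import sys

# The four levels listed above, from most to least verbose.
LEVELS = ("TRACE", "INFO", "WARNING", "ERROR")


def run_with_log_level(command, level="TRACE"):
    """Run command with MV_LOG_LEVEL set for that process only (hypothetical helper)."""
    level = level.upper()
    if level not in LEVELS:
        raise ValueError(f"unknown log level {level!r}, expected one of {LEVELS}")
    # Copy the current environment so the parent process is left untouched.
    env = dict(os.environ, MV_LOG_LEVEL=level)
    return subprocess.run(list(command), env=env, capture_output=True, text=True)


if __name__ == "__main__":
    # Example: python run_traced.py metavision_platform_info
    if len(sys.argv) < 2:
        sys.exit("usage: run_traced.py <command> [args...]")
    result = run_with_log_level(sys.argv[1:])
    print(result.stdout)
    print(result.stderr, file=sys.stderr)
```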

Can I use Prophesee Event-Based cameras with other Machine Vision libraries?

Yes, for example Prophesee offers an acquisition interface for MVTec HALCON. Installation and getting started instructions can be found in the Knowledge Center MVTec HALCON Acquisition Interface page. There is also a video giving an overview of the bridge and how to use it. If you are interested in other libraries, please let us know.

Why is my compiled C++ sample running slowly?

If your C++ code sample appears to be running slowly, double-check that the compilation was set up in Release mode. See our page on sample compilation for detailed compilation steps.

An additional factor contributing to the slow execution of your sample could be an excessive number of events being captured by your sensor. This could be caused by your lighting conditions, the focus adjustment or some camera settings that should be adjusted to your project. You can find some information to help you enhance your setup and settings in our page on recording with Metavision Studio.

Where can I find C++ headers?

The C++ headers are located in the installation directory organized by modules. For example for the CV module:

<install-prefix>/include/metavision/sdk
├── cv
│   ├── algorithms
│   │   └── detail
│   ├── configs
│   ├── events
│   │   └── detail
│   └── utils
│       └── detail
└── other modules...

where <install-prefix> refers to the path where the SDK is installed:

On Ubuntu: /usr when installing with the packages, and /usr/local when building from source code with the deployment step

On Windows: C:\Program Files\Prophesee

In addition to the headers, each folder may have a detail folder containing code that was moved out of the main headers to make them more readable. The detail folders include method implementations, helpers, macros, etc., which are not meant to be included directly in your application.

How can I perform a software pixel reset on an EVK with an IMX636 or GenX320 sensor?

Pixel reset is an operation that can be performed electrically on some Prophesee EVKs.

To perform this operation, you need to send a specific signal to a dedicated pin on the EVK. For the IMX636 sensor, this pin is called TDRSTN, while for the GenX320 sensor, it’s called PXRSTN.

For additional details, refer to the sensor datasheet and your camera’s manual.

Additionally, the pixel reset can be triggered by setting a specific register flag on the sensor itself. For the IMX636 sensor, this is done via the px_td_rstn flag, and for the GenX320 sensor, via the px_sw_rstn flag. This method is particularly useful for the EVK4 camera, which lacks the TDRSTN pin.

To modify the register, you can use the I_HW_Register facility, as demonstrated in the following code excerpts for the IMX636 sensor:

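Using HAL C++ API:

// Open the first available camera (an empty serial selects any connected device)
std::unique_ptr<Metavision::Device> device = Metavision::DeviceDiscovery::open("");
Metavision::I_HW_Register *hw_register = device ? device->get_facility<Metavision::I_HW_Register>() : nullptr;
if (hw_register) {
    hw_register->write_register("PSEE/IMX636/roi_ctrl", "px_td_rstn", 0);
}

Using SDK Stream C++ API (Camera class):

Metavision::Camera camera = Metavision::Camera::from_first_available();
Metavision::I_HW_Register *hw_register = camera.get_device().get_facility<Metavision::I_HW_Register>();
if (hw_register) {
    hw_register->write_register("PSEE/IMX636/roi_ctrl", "px_td_rstn", 0);
}

For the C++ API, make sure to include the I_HW_Register facility header: #include <metavision/hal/facilities/i_hw_register.h>. The HAL excerpt also needs #include <metavision/hal/device/device_discovery.h>, and the Camera excerpt #include <metavision/sdk/stream/camera.h>.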

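Using HAL and SDK Core Python API:

from metavision_core.event_io.raw_reader import initiate_device

device = initiate_device(path=args.input_path)  # args.input_path comes from your own argument parser
hw_register = device.get_i_hw_register()
if hw_register is not None:
    hw_register.write_register("PSEE/IMX636/roi_ctrl", "px_td_rstn", 0)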

For the GenX320 sensor, replace PSEE/IMX636/roi_ctrl with PSEE/GENX320/roi_ctrl and px_td_rstn with px_sw_rstn in the examples provided above.

Note

The pixel reset function generates pixel reset events similar to External Trigger events, but with ID 1 instead of 0. However, for the IMX636 sensor, these events are dumped only when the trigger channel is enabled. More details can be found in your sensor datasheet.
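When both signal types are recorded, you can separate them by ID in post-processing. This Python sketch models each event as a (p, t, id) record, assumed to resemble the fields of Metavision external trigger events, and relies on the convention stated in the note above (ID 0 for external trigger, ID 1 for pixel reset); TriggerEvent and split_trigger_events are hypothetical names.

```python
from typing import Iterable, List, NamedTuple, Tuple


class TriggerEvent(NamedTuple):
    p: int   # signal edge / polarity
    t: int   # timestamp in microseconds
    id: int  # 0 = external trigger, 1 = pixel reset (per the note above)


def split_trigger_events(
    events: Iterable[TriggerEvent],
) -> Tuple[List[TriggerEvent], List[TriggerEvent]]:
    """Return (external trigger events, pixel reset events), preserving order."""
    external, pixel_reset = [], []
    for e in events:
        if e.id == 0:
            external.append(e)
        elif e.id == 1:
            pixel_reset.append(e)
    return external, pixel_reset


if __name__ == "__main__":
    evs = [TriggerEvent(1, 10, 0), TriggerEvent(0, 20, 1), TriggerEvent(1, 30, 1)]
    ext, rst = split_trigger_events(evs)
    print(len(ext), "external trigger event(s),", len(rst), "pixel reset event(s)")
```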

Hardware and Setup

How can I retrieve information on plugged cameras?

You can retrieve the information on the plugged cameras with metavision_platform_info tool.

How can I upgrade the firmware of my camera?

Prophesee EVKs and partners’ cameras are provided with pre-installed firmware. The specific firmware version depends on the camera model and its acquisition date. Firmware upgrades are generally optional unless explicitly stated as required in the Metavision SDK Release Notes or if you encounter a message such as: “The EVK camera with serial XXX is using an old firmware version. Please upgrade to the latest version.” In such cases, updating the firmware is necessary to ensure compatibility and optimal performance.

For Prophesee EVKs, the upgrade procedure is described in the Knowledge Center EVKs firmware upgrade page.

How can I build a custom USB camera using Prophesee sensors?

Both our EVK3 and EVK4 share a hardware and software design based on the Cypress EZ-USB CX3 chipset. This component converts MIPI CSI-2 data from the sensor into USB 3.0 output, facilitating data transfer to host systems.

If you are interested in building your own USB camera, we recommend exploring our Reference Design Kit 3 (RDK3). This kit includes the EVK3 hardware, which is based on the e-con CX3 Reference Design Kit. It also provides our custom CX3 firmware source code, along with the complete Bill of Materials (BOM), detailed design files, and schematics for our camera module, referred to as CCAM5.

Note

This CCAM5 module provides a Prophesee IMX636 or GenX320 sensor with MIPI D-PHY CSI-2 compatible interface in a 30mm x 30mm PCB module format. It contains the necessary power supply and control circuits required by the sensor whilst presenting standard control and data interfaces to allow straightforward integration into a system.

Please note that to ensure compatibility between the Metavision SDK and your custom USB camera, you will need to build a specific camera plugin. This can be efficiently achieved by customizing the Prophesee plugin for CX3-based camera to suit your hardware setup.

For more information or to discuss your requirements, please feel free to contact our sales team.

How to Interface Prophesee Sensors with an MCU, MPU, or FPGA/MPSoC?

Our EVK3 and EVK4 are designed to interface with a host machine via USB. However, connecting these kits to an MCU, MPU or FPGA/MPSoC development kit via USB is not the most efficient approach to fully leverage their capabilities.

Instead, we recommend checking out our Embedded Starter Kits:

STM32F7 Starter Kit (with GenX320 sensor)

supported sensor: GenX320

data streaming: sensor data is streamed using the CPI protocol, received via the DCMI parallel interface of the board.

included resources: sensor driver and an application to visualize events on the board LCD

These kits enable direct data streaming from the sensor via their MIPI or CPI interfaces, providing a more efficient and high-performance connection.

If you are interested in exploring other platforms, please feel free to contact our sales team.

What kind of extension USB cable should I use for my camera?

If the USB cable provided with the camera is too short for your use, you will have to use an extension cable, but be careful: you will quickly reach the USB length limits for USB 3.0 SuperSpeed support. Hence, we recommend using an active USB 3.0 extension cable with an external power supply. Here is an example of such a cable: StarTech.com Active USB 3.0 Extension Cable with AC Power Adapter

To avoid flicker events, which non-flickering light source should I use?

The best non-flickering light source is halogen lighting. If you want an LED source, double-check that it does not flicker (many LED sources use PWM modulation for dimming, which produces flicker). We successfully used the Dracast Camlux Pro Bi-Color.

How can I mask the effect of crazy/hot pixels?

Our latest generations of sensors (Gen4.1, IMX636 and GenX320) provide a Digital Event Mask that can silence a list of pixels. This feature can be used to turn off crazy/hot pixels and can be seen in action in the metavision_hal_showcase sample.

For more information, see the dedicated page on Digital Event Mask.

Note also that the metavision_active_pixel_detection sample can be used to detect the coordinates of hot pixels.
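If you want to prototype hot-pixel detection on a recording yourself, a simple heuristic is to flag pixels whose event count is far above that of a typical active pixel. The sketch below is not the algorithm used by metavision_active_pixel_detection; it assumes (x, y, p, t) tuples and an arbitrary factor of 10 over the median per-pixel count, both of which you would tune. The resulting coordinates are the kind of list you could then pass to the Digital Event Mask.

```python
from collections import Counter
from statistics import median
from typing import Iterable, List, Tuple


def find_hot_pixels(
    events: Iterable[Tuple[int, int, int, int]], factor: float = 10.0
) -> List[Tuple[int, int]]:
    """Return sorted (x, y) coordinates whose event count exceeds factor x the median count.

    Only pixels that fired at least once enter the median.
    """
    counts = Counter((x, y) for x, y, _p, _t in events)
    if not counts:
        return []
    threshold = factor * median(counts.values())
    return sorted(xy for xy, n in counts.items() if n > threshold)


if __name__ == "__main__":
    noisy = [(5, 5, 1, t) for t in range(100)]                   # one pixel firing constantly
    normal = [(x, 0, 0, t) for x in range(3) for t in range(2)]  # ordinary activity
    print(find_hot_pixels(noisy + normal))
```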

What is the root cause of the tail effect or the delayed response observed for negative polarity events in low-light conditions with the IMX636 sensor?

These phenomena are closely tied to the concept of “latency”.

While the term “latency” can have various interpretations, we provide detailed clarifications in this KC article.

In essence, the sensor’s latency decreases in bright lighting conditions, enabling faster event detection, while it increases under low-light conditions, affecting responsiveness.

This behavior can be attributed to the sensor’s photodiode integration time—the duration required to detect a contrast change. In high-light conditions, the integration time is shorter, enabling quicker detection of events, whereas in low-light conditions, the integration time is longer, leading to slower responsiveness.

However, the occurrence of the tail effect or delayed response exclusively during OFF events with the IMX636 sensor is an inherent characteristic of the sensor. More specifically, this relates to the pixel architecture/design. For instance, such behavior is almost nonexistent in the GenX320 sensor.

To reduce this “tail effect”, you could:

tune the biases, in particular bias_hpf: as explained in the documentation, increasing it should help remove some of the OFF events.

use the Event Trail Filter provided by the ESP (Event Signal Processing) feature available in the IMX636 sensor (integrated into EVK4 and certain EVK3 models). The TRAIL option retains the first event of a burst following a polarity transition while suppressing subsequent events of the same polarity generated within the specified Trail threshold period. This filter can impact both ON and OFF events. However, since the tail effect described here affects only OFF events, applying the filter should yield the desired behavior.
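To reason about what the TRAIL option keeps and drops, here is a software model of the behavior described above. It is not the on-sensor implementation, which you configure through the ESP rather than with this code. It assumes time-sorted (x, y, p, t) tuples and measures the threshold from the first event of the current burst at each pixel; that choice of reference is our assumption, and the sensor may define the window differently.

```python
from typing import Dict, Iterable, List, Tuple

Event = Tuple[int, int, int, int]  # (x, y, p, t), t in microseconds, sorted by t


def trail_filter(events: Iterable[Event], threshold_us: int) -> List[Event]:
    """Keep the first event of each same-polarity burst per pixel; drop repeats inside the window."""
    bursts: Dict[Tuple[int, int], Tuple[int, int]] = {}  # (x, y) -> (burst polarity, burst start t)
    kept: List[Event] = []
    for x, y, p, t in events:
        burst = bursts.get((x, y))
        starts_new_burst = (
            burst is None                    # first event seen at this pixel
            or p != burst[0]                 # polarity transition
            or t - burst[1] >= threshold_us  # window of the previous burst has elapsed
        )
        if starts_new_burst:
            bursts[(x, y)] = (p, t)
            kept.append((x, y, p, t))
    return kept


if __name__ == "__main__":
    stream = [(0, 0, 0, 0), (0, 0, 0, 100), (0, 0, 0, 500), (0, 0, 1, 600)]
    print(trail_filter(stream, threshold_us=1000))
```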

What is the Quantum Efficiency / Spectral Response of Prophesee Sensors?

This information can be found in the Datasheet of our sensors, but we share some indicative figures in a dedicated page in the Knowledge Center.

What is the maximum illuminance a Prophesee event-based sensor can support without being damaged?

We did not characterize a maximum illuminance for our sensors, but in our tests, the sensors were not damaged when exposed to 100 klux for a limited time. However, at this illuminance level, a number of pixels can no longer send events because their potentials saturate quickly.

What is the SNR (Signal to Noise ratio) of my event-based sensor?

The SNR would traditionally provide the temporal (or dark) noise on frame-based sensors. This is used as a metric for understanding various performance capabilities of specific sensors in various lighting conditions. As event sensors are tuned for the environment, traditional noise measurement metrics are irrelevant.

With event-based sensors, each pixel operates asynchronously with a refresh time managed by a parameter called bias_refr, without an effective exposure time. Because there is no exposure time, the sensor does not retain pixel values or specific charge values longer than its refresh cycle, and therefore does not provide data on charge accumulation over time.

While event sensors are still susceptible to electronic noise, the level of control a user has over the sensor by adjusting the bias values allows them to effectively filter out noise (and all other non-relevant event data) from the desired signal of the objects within the field of view. The typical noise you will see from an event sensor is an overactive (or underactive) pixel that displays events at a rate inconsistent with the rest of the sensor for the given application and environment. This can be typically accounted for with bias adjustments, event filtering with the ESP, or with data filtering during processing.

Troubleshooting

Windows installation issues

How to install EVKs driver on Windows, if the installation of unsigned drivers is disabled on my PC?

In certain instances, the installation of EVK drivers may not be permitted on your Windows PC. This generally depends on the policies applied by your IT department. If you are in that situation, you can temporarily authorize the installation by following the steps described on this page: How to Install Unsigned Drivers on Windows

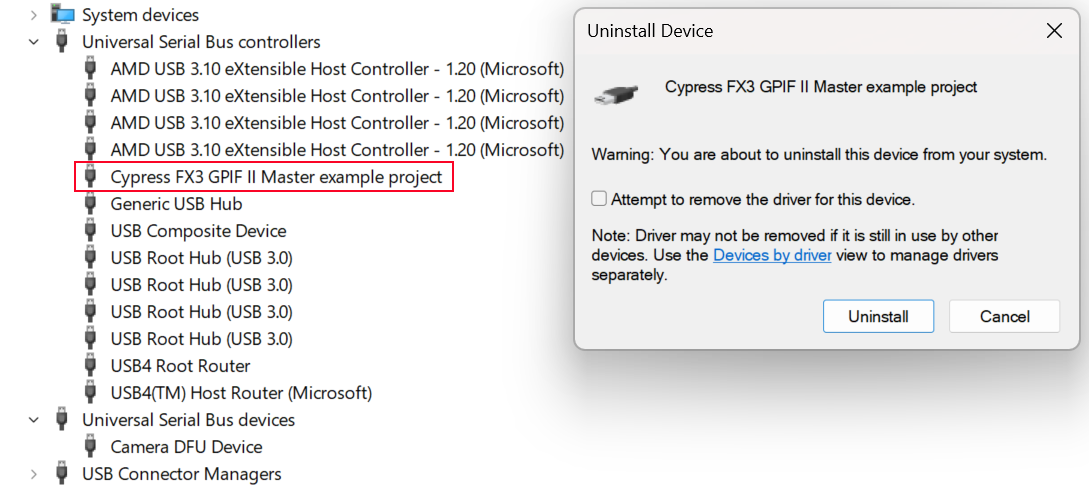

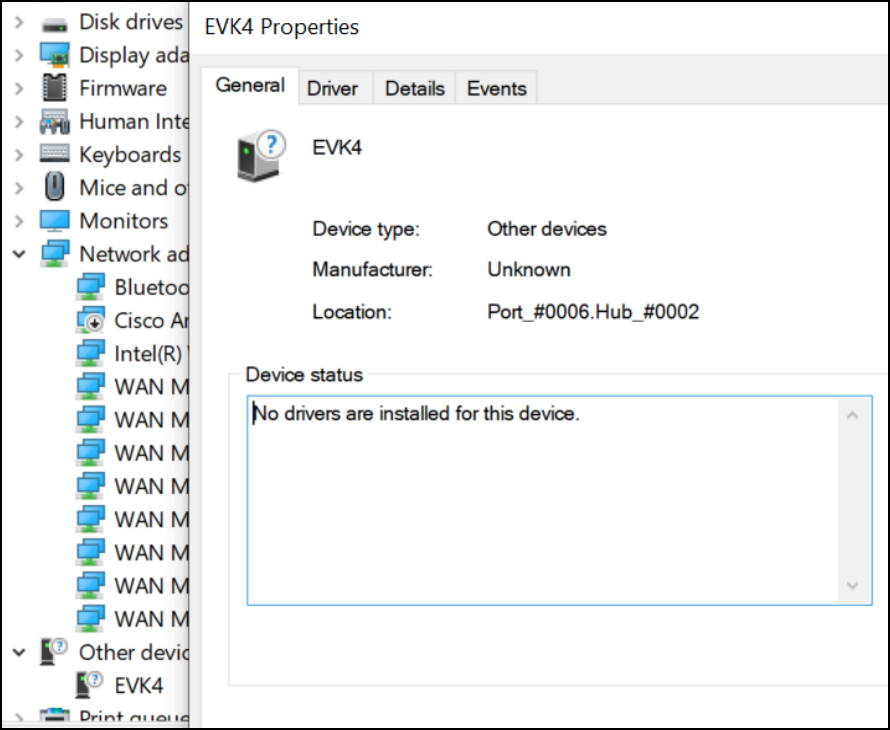

How to resolve the ‘No Drivers are Installed for this Device’ issue for an EVK in Windows Device Manager?

Sometimes, EVK drivers are copied to the system (either automatically by the Windows installer, or manually with wdi-simple.exe in the case of OpenEB and SDK Pro), yet the EVK is not recognized. In that situation, check the EVK properties in the Device Manager and see whether the EVK shows a message similar to No drivers are installed for this device:

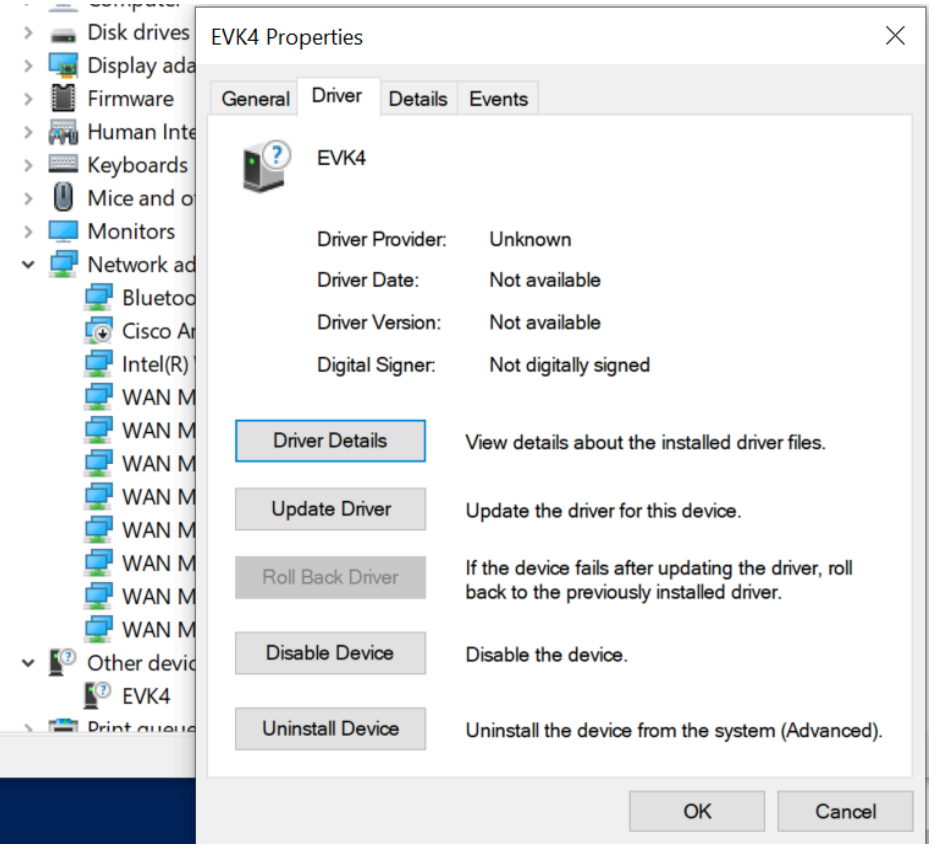

If so, follow those steps:

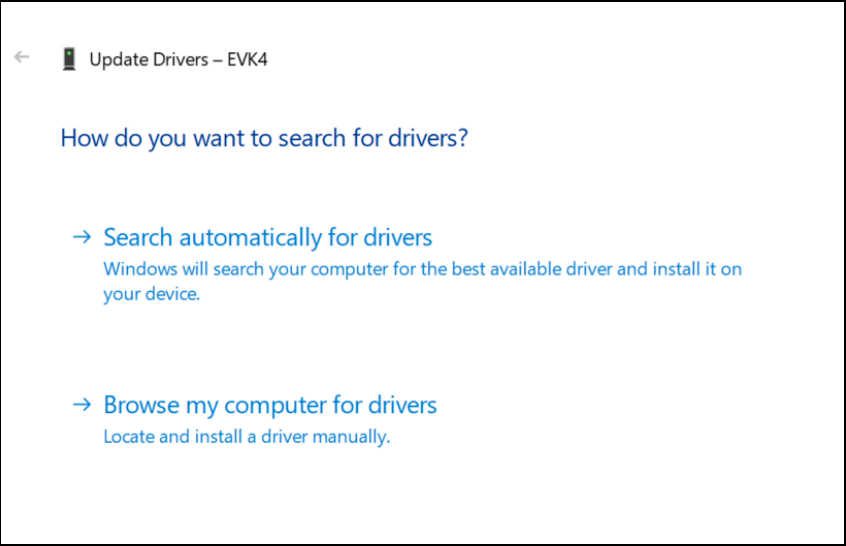

Select the

Drivertab and click onUpdate Driver:

Choose

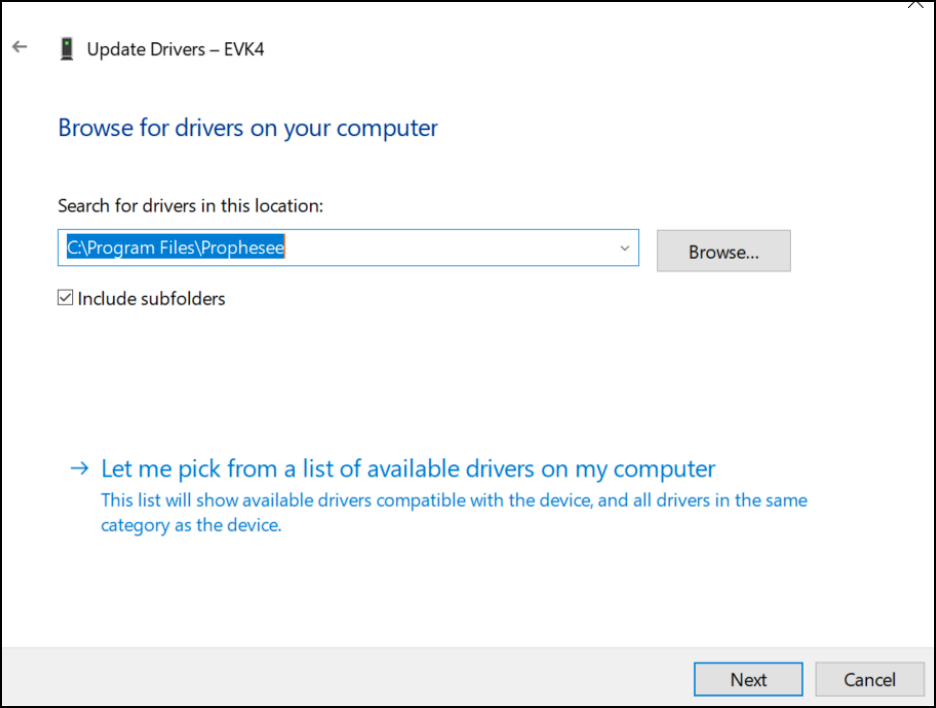

Browse my computer for drivers:

Select

Let me pick from a list of available drivers on my computerand click next:

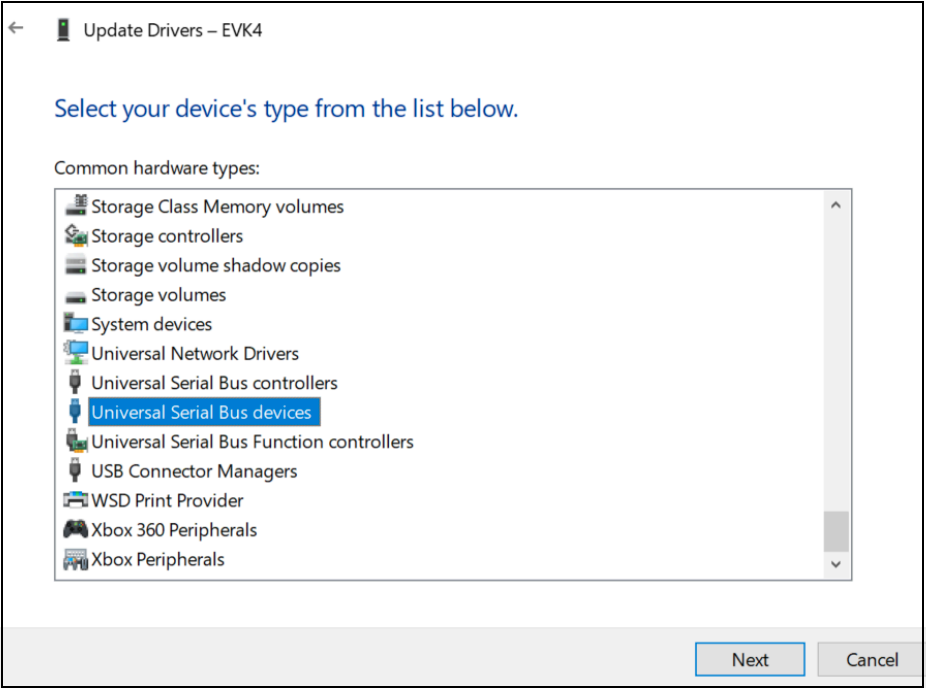

Go to

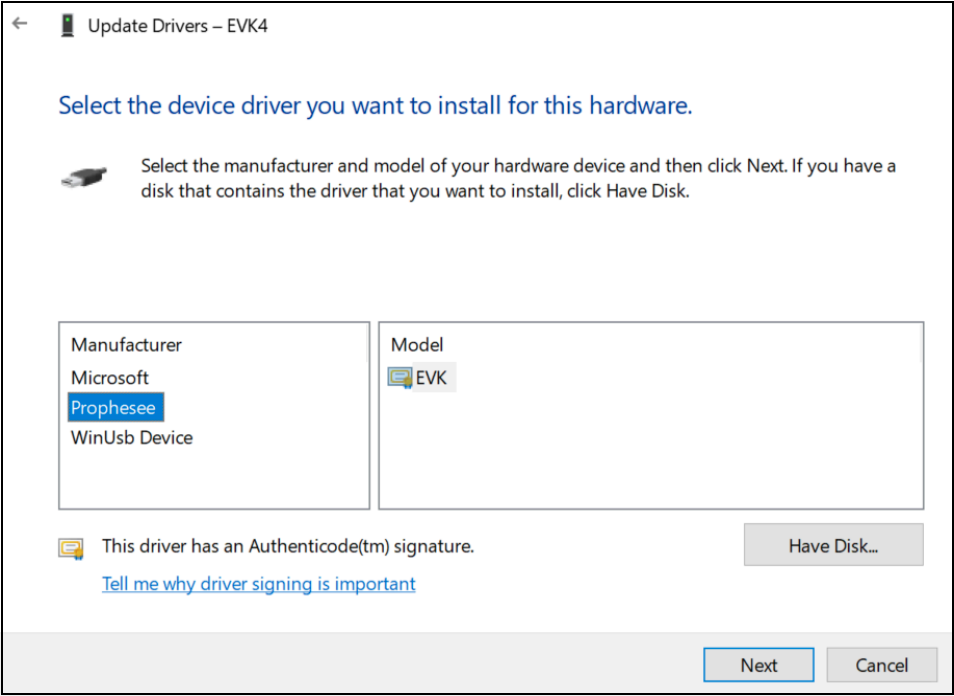

Universal Serial Bus device:

Choose the EVK driver from Prophesee:

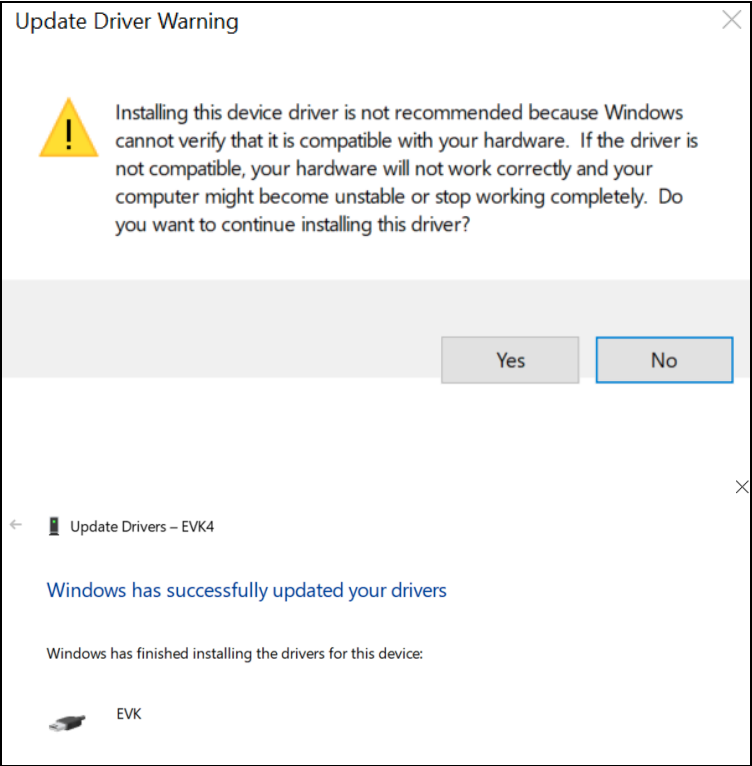

You will get a warning, but the driver should be successfully updated:

Following these steps should resolve the EVK driver recognition issue. If the problem persists, contact us by creating a support ticket with as much information as possible about your camera and platform (camera model, operating system, SDK version etc.)

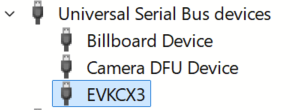

How to resolve ‘No camera found’ for an EVK3 or IDS partner camera on Windows?

If you own an EVK3 or an IDS partner camera and it is not detected by Metavision SDK, we advise you to verify that the correct driver is installed by following these steps (here, we give an example for EVK3 GENX320):

Open Device Manager

Locate the camera:

Expand Universal Serial Bus devices and check if the camera appears as EVKCX3

If the camera is not detected:

How can I fix the error ‘Entry Point Not Found’ when launching SDK applications on Windows?

When running Metavision SDK applications on Windows, you may hit the following error message: “Entry Point Not Found. The procedure entry point inflateValidate could not be located in the dynamic link library C:\Program Files\Prophesee\bin\libpng16.dll.”

This means the version of libpng included in Metavision is incompatible with some other libraries of your installation.

Most probably, there are multiple copies of zlib1.dll in your installation and the first one found by Windows is not ours.

First, check whether multiple copies of zlib1.dll exist in your installation.

To do so, open File Explorer and search for zlib1.dll.

You should have at least one result, in the folder C:\Program Files\Prophesee\third_party\bin

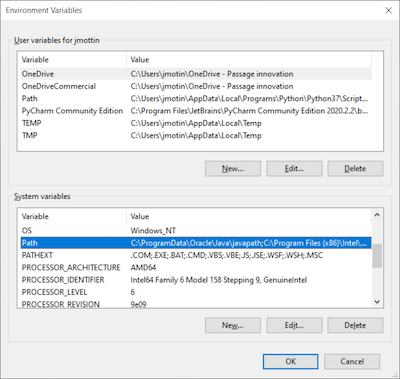

If you find multiple results, you need to reorder the entries of your Path environment variable so that the Prophesee paths come first. Here is one way to do it:

open the start menu

search environment

select edit environment variables of the system

click environment variables

in system variables, locate Path

edit and reorder the environment variables to have Prophesee Paths at the top
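To see which zlib1.dll a program would pick up through Path, you can list the Path entries that contain it, in search order. This sketch only models the Path part of the Windows DLL search order (the application directory and system directories are searched before Path), and find_dll_in_path is a hypothetical helper name; once Path is reordered, the first entry printed should be the Prophesee folder.

```python
import os
from pathlib import Path


def find_dll_in_path(dll_name="zlib1.dll", path_value=None):
    """Return the Path entries containing dll_name, in the order they are searched."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    hits = []
    for entry in path_value.split(os.pathsep):
        # Path entries may be quoted or contain variables such as %SystemRoot%.
        entry = os.path.expandvars(entry.strip().strip('"'))
        if entry and (Path(entry) / dll_name).is_file():
            hits.append(entry)
    return hits


if __name__ == "__main__":
    for i, folder in enumerate(find_dll_in_path()):
        print(i, folder)
```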

How can I fix the error ‘the code execution cannot proceed because msvcp140.dll / msvcp140_1.dll was not found’ on Windows?

During installation of Metavision SDK on Windows, we check the presence of MS Visual C++, and we rely on the registry to know if the libs are there or not.

If you get this error, it could mean that a previous uninstallation of MS Visual C++ Redistributable did not complete properly (without any visible error), leaving a registry value for software that is no longer installed. In this case, the installation of Metavision SDK on Windows could skip MS Visual C++.

To fix the issue, please check if the MS Visual C++ Redistributable is installed. If not, then install it properly.

Ubuntu installation issues

How can I fix the error ‘Failed to load module “canberra-gtk-module”’ on Ubuntu?

Install the package required to display videos, as mentioned in the installation tutorial:

sudo apt -y install libcanberra-gtk-module

How can I fix the error ‘CMake Error Could NOT find Python3’ when compiling OpenEB on Ubuntu?

When compiling OpenEB on Ubuntu, the generation of the makefiles might fail with Could NOT find Python3 even though you do have a supported Python version.

This problem can be caused by a more recent version of Python present on your system that cmake is trying to use.

The error could be similar to:

$ cmake .. -DCMAKE_BUILD_TYPE=Release -DBUILD_TESTING=OFF
-- The C compiler identification is GNU 9.3.0
(...)
-- Found Boost: /usr/lib/x86_64-linux-gnu/cmake/Boost-1.71.0/BoostConfig.cmake (found version "1.71.0") found components: program_options filesystem timer chrono thread
CMake Error at /usr/share/cmake-3.16/Modules/FindPackageHandleStandardArgs.cmake:146 (message):
  Could NOT find Python3 (missing: Python3_INCLUDE_DIRS Python3_LIBRARIES
  Development Development.Module Development.Embed) (found version "3.9.5")
Call Stack (most recent call first):
  /usr/share/cmake-3.16/Modules/FindPackageHandleStandardArgs.cmake:393 (_FPHSA_FAILURE_MESSAGE)
  cmake/Modules/FindPython/Support.cmake:2999 (find_package_handle_standard_args)
  cmake/Modules/FindPython3.cmake:391 (include)
  cmake/custom_functions/python3.cmake:63 (find_package)
  CMakeLists.txt:211 (include)

In that case, we can see that cmake found some version of the Python interpreter, but then fails because it does not find other required components for this specific version.

The solution is to specify to cmake which version of Python to use with the option -DPython3_EXECUTABLE=<path_to_python_to_use>.

For example to specify Python 3.9:

$ which python3.9
/usr/bin/python3.9
$ cmake .. -DCMAKE_BUILD_TYPE=Release -DBUILD_TESTING=OFF -DPython3_EXECUTABLE=/usr/bin/python3.9
-- The C compiler identification is GNU 9.3.0
(...)
-- Found Boost: /usr/lib/x86_64-linux-gnu/cmake/Boost-1.71.0/BoostConfig.cmake (found version "1.71.0") found components: program_options filesystem timer chrono thread
-- Found Python3: /usr/bin/python3.9 (found version "3.9.10") found components: Interpreter Development Development.Module Development.Embed
(...)
-- Configuring done
-- Generating done
-- Build files have been written to: /home/user/dev/openeb/build

Recorded files issues

How can I fix the error ‘No plugin available for input stream. Could not identify source from header’ when reading RAW files?

This error message can occur when trying to read a RAW file, for example with Metavision Viewer:

$ metavision_viewer -i /path/to/my_file.raw
[HAL][ERROR] While opening RAW file '/path/to/my_file.raw':
terminate called after throwing an instance of 'Metavision::HalException'
  what():
------------------------------------------------
Metavision HAL exception
Error 101000: No plugin available for input stream. Could not identify source from header:
% Date 2021-02-03 11:53:21
% integrator_name Prophesee
(...)
------------------------------------------------

This problem can have multiple causes:

the camera plugins are not found. If you installed third-party plugins or compiled plugins yourself, you need to specify the location of the plugin binaries using the MV_HAL_PLUGIN_PATH environment variable (follow the camera plugins installation guide).

the RAW file is not recognized. The RAW file header contains ASCII information that is used to detect which plugin to use when opening the file with Metavision SDK. If this header is missing some required metadata, opening the file will fail. For example, the error No plugin available could be caused by a missing or malformed plugin_name in the header. Hence, it’s good practice to check this header with a text editor when hitting this error, and fix it if needed. Note that the metavision_file_info application prints some information about the RAW file (including the plugin_name) that can help fix the RAW file. Finally, if you have multiple RAW files to update, contact us so that we can provide you with a script.

Why do I get errors when trying to read recorded files (RAW or HDF5) with Studio or the API on Windows?

When trying to read a recorded file (RAW or HDF5), you may receive errors stating Unable to open RAW file or not an existing file, although the file does exist on your system.

For example with Metavision Viewer or Metavision Studio:

------------------------------------------------
Metavision SDK Stream exception
Error 103001: Opening file at c:\Users\name\Recordings\??.raw: not an existing file.
No such file or directory.
------------------------------------------------

Or when using EventsIterator from the Python API:

------------------------------------------------
Metavision HAL exception
Error 101000: Unable to open RAW file 'c:\Users\name\Recordings\piéton.raw'
------------------------------------------------

Those errors might be caused by non-ANSI characters in the file path (either in the folder or the file name), such as accented characters or Asian characters (Chinese, Japanese, etc.). Until the SDK is enhanced to support those file paths properly, the workaround is to store your recordings in pure-ANSI paths.
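Before opening a batch of recordings, you can list the ones whose path would trigger this issue. The sketch below flags any path containing non-ASCII characters, a conservative stand-in for the pure-ANSI recommendation that also catches accented names such as piéton.raw; the .raw/.hdf5 suffixes and the helper name are assumptions.

```python
from pathlib import Path


def non_ascii_recordings(root, suffixes=(".raw", ".hdf5")):
    """Return recordings under root whose full path contains non-ASCII characters."""
    return sorted(
        p
        for p in Path(root).rglob("*")
        if p.is_file()
        and p.suffix.lower() in suffixes
        and not str(p.resolve()).isascii()  # checks folder names as well as the file name
    )


if __name__ == "__main__":
    import sys

    for path in non_ascii_recordings(sys.argv[1] if len(sys.argv) > 1 else "."):
        print("rename or move:", path)
```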

Cameras issues

Why is my camera not detected by the SDK?

When using SDK applications or the API, you may face situations where your camera is properly plugged into your laptop but not detected by the software. For example, when using Metavision Studio you would get the message “No cameras available, please check that a camera is connected”; when launching Metavision Viewer or following our Getting Started Guides, you would get the error “Camera not found. Check that a camera is plugged into your system and retry”; or when trying to get information about your camera using metavision_platform_info, it would output “No systems USB connected have been found on your platform”. In any of these cases, check the following points to find the root cause:

Make sure that your camera is detected by your Operating System.

On Linux, type

lsusbin a terminal and check that the camera appears in the list. If not, try other USB port and cables.On Windows, check if the camera is listed in the Device Manager in the “Universal Serial Bus Devices” section. If not, it could be caused by a permission problem during the drivers installation, so check the FAQ entry about installation of unsigned drivers on Windows. If the EVK is listed but the Properties tab shows a message similar to

No drivers are installed for this device, check the FAQ entry about this driver issue.If you are using a Prophesee EVK1 VGA or HD, your camera is not supported by the SDK since version 4.0.0, so make sure that you don’t have an SDK version more recent that 3.1. To download this version, access our SDK 3.1 page of the Download Center

If you are using a Prophesee EVK1 QVGA (the old model supporting both CD and EM events), your camera has not been supported since SDK version 2.0.0. The only software you can use with it is version 1.4.1 of our SDK, available on the SDK 1.4.1 page of the Download Center.

Make sure that the camera plugins were properly installed. This is especially important if you are using a camera from one of our partners. Follow the partner's installation guide and see the camera plugins installation guide for more information about plugins.

If the camera is still not detected after these checks, contact us by creating a support ticket with as much information as possible about your camera and platform (camera model, operating system, SDK version, etc.).
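On Linux, one way to spot the camera in the lsusb output is to compare the device list before and after plugging it in. The following Python sketch is illustrative only: it parses the standard lsusb line format (Bus NNN Device NNN: ID vvvv:pppp description), it deliberately does not hard-code a Prophesee vendor ID, and the helper names are hypothetical.

```python
import re
import subprocess

# Standard lsusb line: "Bus 002 Device 003: ID vvvv:pppp Description text"
LSUSB_LINE = re.compile(
    r"^Bus (\d+) Device (\d+): ID ([0-9a-fA-F]{4}):([0-9a-fA-F]{4})\s*(.*)$"
)


def parse_lsusb(output):
    """Parse lsusb output into a list of dicts (one per USB device)."""
    devices = []
    for line in output.splitlines():
        match = LSUSB_LINE.match(line.strip())
        if match:
            bus, dev, vid, pid, desc = match.groups()
            devices.append({
                "bus": int(bus),
                "device": int(dev),
                "vendor_id": vid.lower(),
                "product_id": pid.lower(),
                "description": desc.strip(),
            })
    return devices


def list_usb_devices():
    """Run lsusb (Linux only, usbutils package) and parse its output."""
    result = subprocess.run(["lsusb"], capture_output=True, text=True, check=True)
    return parse_lsusb(result.stdout)


def new_devices(before, after):
    """Return devices in `after` whose vendor:product ID was absent from `before`."""
    known = {(d["vendor_id"], d["product_id"]) for d in before}
    return [d for d in after if (d["vendor_id"], d["product_id"]) not in known]
```

Call list_usb_devices() with the camera unplugged, plug it in, call it again, then inspect new_devices(before, after). An empty result suggests that the operating system does not see the camera at all.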

How to resolve “EVKx device is not enumerated as a USB 3 SuperSpeed device” or device not recognized as USB3?

First, note that EVKs have been designed to avoid bandwidth bottlenecks caused by the communication interface. However, the bandwidth offered by USB 3.0 bulk endpoints is not guaranteed and can be shared with other devices connected to the same host controller. For optimal performance, Prophesee recommends connecting only one USB device to the host controller.

If the issue persists after removing extra USB devices from the same host controller and making sure that the port is at least USB 3.0 compatible, you may be running into an issue related to backward compatibility from USB 3.0 to USB 2.0. In this case, disconnect the cable from the USB 3.0 port of your PC and reconnect it with a quicker, firmer motion (while taking care not to damage the cable or port).

You can also try connecting the USB-A end of the cable to the computer before connecting the USB-C end to the EVK (for EVKs with a USB-C port).

Should the issues still persist, please contact us for further assistance.
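On Linux, you can check which speed each USB device actually negotiated by reading the speed attribute that the kernel exposes in sysfs (in Mb/s: 480 for USB 2.0 High Speed, 5000 or more for USB 3.x SuperSpeed). The Python sketch below is a hypothetical helper, not an SDK tool. It assumes the standard /sys/bus/usb/devices layout, where each device folder contains idVendor, idProduct and speed files.

```python
from pathlib import Path

SUPERSPEED_MBPS = 5000  # USB 3.0 SuperSpeed signalling rate, in Mb/s


def read_attr(dev_dir, name):
    """Read one sysfs attribute file, or return None if it does not exist."""
    try:
        return (dev_dir / name).read_text().strip()
    except OSError:
        return None


def usb_speeds(sysfs_root="/sys/bus/usb/devices"):
    """Return (device, vendor_id, product_id, speed_mbps) for each USB device in sysfs."""
    rows = []
    for dev_dir in sorted(Path(sysfs_root).iterdir()):
        vid = read_attr(dev_dir, "idVendor")
        speed = read_attr(dev_dir, "speed")
        if vid is None or speed is None:
            continue  # interface entries have no idVendor/speed files
        rows.append((dev_dir.name, vid, read_attr(dev_dir, "idProduct"), float(speed)))
    return rows


def below_superspeed(rows):
    """Keep only the devices that negotiated less than USB 3.0 SuperSpeed."""
    return [row for row in rows if row[3] < SUPERSPEED_MBPS]
```

If your EVK appears in below_superspeed(usb_speeds()) with a speed of 480, it has fallen back to USB 2.0, which is the situation described above.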

Why do I get the message ‘Could not enumerate devices on board EVKv2 frame too short to be valid’ when using an EVK2?

This message means that the firmware of your EVK2 needs to be upgraded. To perform the upgrade, follow the procedure described in the Knowledge Center EVK2 upgrade page.

To access this page, request a Knowledge Center account and contact us (including a copy of the error message and the serial number of your camera) so that we can grant you access to the EVK2 upgrade page.

Why do I get the message “[HAL][WARNING] Unable to open device: LIBUSB_ERROR_ACCESS” when using my EVK on Ubuntu?

This means that your camera was detected but the operating system does not allow you to access it. This can be caused by missing udev rules files for your camera. To add them, download OpenEB, copy the udev rules files into the system path and reload them with these commands:

sudo cp <OPENEB_SRC_DIR>/hal_psee_plugins/resources/rules/*.rules /etc/udev/rules.d
sudo udevadm control --reload-rules
sudo udevadm trigger
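Before copying the files, you may want to check which of the OpenEB rules files are missing from the system. This Python sketch is illustrative only: it assumes the OpenEB source layout used in the command above, compares file names only (not file contents), and missing_udev_rules is a hypothetical name.

```python
from pathlib import Path


def missing_udev_rules(openeb_src_dir, rules_dir="/etc/udev/rules.d"):
    """List the OpenEB *.rules file names not yet present in `rules_dir`.

    Only names are compared; an outdated file with the same name is not detected.
    """
    src = Path(openeb_src_dir) / "hal_psee_plugins" / "resources" / "rules"
    installed = {p.name for p in Path(rules_dir).glob("*.rules")}
    return sorted(p.name for p in src.glob("*.rules") if p.name not in installed)
```

An empty list means every rules file shipped with OpenEB already exists in /etc/udev/rules.d; you may still need to reload the rules and re-plug the camera.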

Metavision Studio issues

What should I do when Studio shows the error “An error has been detected in the event stream, some data was corrupted and could not be recovered”?

This log message is raised by the EVT3 decoder when it detects a NonMonotonicTimeHigh or InvalidVectBase protocol violation. See the section Event Stream decoding in Metavision Studio, which explains how to configure the decoder and mitigate this situation.

What should I do when Studio shows the error “Metavision Studio internal error”?

This error may have the same root cause as the ‘Entry Point Not Found’ error, which is discussed in the corresponding FAQ entry.

A similar issue can also occur if the Metavision SDK was upgraded without fully uninstalling the previous version. To address this, we recommend properly uninstalling the current version and performing a fresh installation of the SDK.

What should I do when Studio shows empty windows, has missing UI elements or crashes randomly?

If none of the above answers about Studio internal errors and GPU-related errors helped, you are probably hitting a currently unexplained behaviour of Metavision Studio that occurs in some specific operating system configurations.

To help us provide more effective support, please collect log data using the following method:

Set the following three environment variables in your terminal:

Linux

export ELECTRON_ENABLE_LOGGING=1
export MV_STUDIO_ENABLE_CLIENT_LOG=1
export MV_WINDOW_FAIL_DOM_DUMP_DIR=/home/JohnDoe/tmp

Windows

set ELECTRON_ENABLE_LOGGING=1
set MV_STUDIO_ENABLE_CLIENT_LOG=1
set MV_WINDOW_FAIL_DOM_DUMP_DIR=C:\Users\JohnDoe\tmp

From the same terminal, launch Metavision Studio and reproduce the actions that cause the issue. This will print logs directly in the terminal. Please save these logs both as a plain text file and as an HTML file in the specified folder.

Please share these files with us along with a detailed description of the problem. Once we receive this information, we will analyze the issue and get back to you with potential fixes or workarounds.
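If you prefer to capture the terminal output to a file automatically, the following Python sketch sets the same three environment variables and runs a command you provide, printing its output in the terminal while also copying it to a text file in the dump folder. The launch command for Metavision Studio depends on your installation, so it is left as a parameter; run_with_log and the studio_log.txt file name are hypothetical.

```python
import os
import subprocess
from pathlib import Path


def studio_debug_env(dump_dir):
    """Copy of the current environment with the three Studio logging variables set."""
    env = dict(os.environ)
    env["ELECTRON_ENABLE_LOGGING"] = "1"
    env["MV_STUDIO_ENABLE_CLIENT_LOG"] = "1"
    env["MV_WINDOW_FAIL_DOM_DUMP_DIR"] = str(dump_dir)
    return env


def run_with_log(cmd, dump_dir, log_name="studio_log.txt"):
    """Run `cmd`, echo its combined stdout/stderr and save it to dump_dir/log_name."""
    dump_dir = Path(dump_dir)
    dump_dir.mkdir(parents=True, exist_ok=True)
    log_path = dump_dir / log_name
    with open(log_path, "w", encoding="utf-8") as log, subprocess.Popen(
        cmd, env=studio_debug_env(dump_dir), stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT, text=True, encoding="utf-8", errors="replace",
    ) as proc:
        for line in proc.stdout:
            print(line, end="")  # keep showing the log in the terminal
            log.write(line)
    # Leaving the Popen context waits for the process, so returncode is set
    return proc.returncode, log_path
```

Pass the command you normally use to start Studio as a list, for example run_with_log([<your Studio launch command>], "/home/JohnDoe/tmp"), then send us the resulting text file together with your description of the problem.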

What should I do when Studio shows the internal error code 3221225785?

When you install Metavision SDK on Windows, zlib1.dll is installed in the following directory:

C:\Program Files\Prophesee\third_party\bin\

However, if multiple versions of zlib1.dll exist on your system and the first one found is not the correct one, Studio will fail to start.

Several solutions to this issue are outlined in this forum post.
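To see which copies of zlib1.dll might be picked up, the following Python sketch lists every directory on PATH that contains the file, in PATH order. It is only an approximation: Windows also searches the application directory and system directories before PATH, so the first hit here is not necessarily the one that gets loaded. The sketch also converts the error code to hexadecimal: 3221225785 is 0xC0000139, the Windows status code for an entry point that could not be found, which is consistent with a mismatched DLL. The helper names are hypothetical.

```python
import os
from pathlib import Path


def find_on_path(filename, path_value=None):
    """Return every PATH entry containing `filename`, in PATH search order."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    hits = []
    for entry in path_value.split(os.pathsep):
        candidate = Path(entry) / filename
        if entry and candidate.is_file():
            hits.append(str(candidate))
    return hits


def ntstatus_hex(code):
    """Format a (possibly signed) 32-bit Windows exit code as hex, e.g. 0xC0000139."""
    return f"0x{code & 0xFFFFFFFF:08X}"


if __name__ == "__main__":
    print("Exit code:", ntstatus_hex(3221225785))
    for hit in find_on_path("zlib1.dll"):
        print("Found:", hit)
```

If a copy outside C:\Program Files\Prophesee\third_party\bin\ appears before the Prophesee one, it is a likely culprit.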

Other issues

How can I resolve a ModuleNotFoundError when running a Python script or importing a Python SDK module?

When running a Python script or importing an SDK module, you may encounter an error similar to:

ModuleNotFoundError: No module named 'metavision_core'

If this occurs, follow these steps to troubleshoot the issue:

On Linux, when the SDK is installed with packages, the main command (sudo apt -y install metavision-sdk) installs the Python bindings only for the default Python version of your Ubuntu distribution (e.g., Python 3.12 on Ubuntu 24.04). If you are using a different supported Python version (e.g., Python 3.11 on Ubuntu 24.04), you need to install an additional package with the following command:

sudo apt -y install metavision-sdk-python3.11

Ensure that the SDK Python modules were properly deployed:

On Linux, if the SDK was installed via packages, modules should be located in:

/usr/lib/python3/dist-packages

On Windows, if the SDK was installed using the installer, modules are found in:

C:\Program Files\Prophesee\lib\python3\site-packages

If you built the SDK from source, the Python modules should be located in either:

The build folder where you compiled the SDK.

The deployment folder where you installed them.

Note

In the deployment directories mentioned above, you should find two types of modules:

Pure Python modules: directories with Python source code, for example metavision_core, metavision_core_ml, etc.

Python modules implemented in C++, compiled as shared library files using pybind11, for example metavision_hal, metavision_sdk_cv, etc.

For instance, a default installation on Ubuntu 24 using packages should include the following files and directories:

$ ls /usr/lib/python3/dist-packages | grep metavision
metavision_core
metavision_core_ml
metavision_hal.cpython-312-x86_64-linux-gnu.so
metavision_ml
metavision_sdk_analytics.cpython-312-x86_64-linux-gnu.so
metavision_sdk_base.cpython-312-x86_64-linux-gnu.so
metavision_sdk_core.cpython-312-x86_64-linux-gnu.so
metavision_sdk_cv3d.cpython-312-x86_64-linux-gnu.so
metavision_sdk_cv.cpython-312-x86_64-linux-gnu.so
metavision_sdk_ml.cpython-312-x86_64-linux-gnu.so
metavision_sdk_stream.cpython-312-x86_64-linux-gnu.so
metavision_sdk_ui.cpython-312-x86_64-linux-gnu.so

Ensure that you are using a Python version compatible with the Metavision SDK. The supported Python versions are detailed in each installation guide, and a summary is available in the Operating System Support Table.

Make sure that the Metavision SDK Python modules are accessible to Python. Depending on how you installed Python and the SDK, you may need to set the PYTHONPATH environment variable so that it points to the directory where the Python modules were deployed (see the previous point for details). If you encounter any issues or are uncertain, it is recommended to set PYTHONPATH explicitly:

Linux

export PYTHONPATH=/path/to/modules

Windows

set PYTHONPATH=\path\to\modules
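To check what Python actually sees, the following Python sketch prints the interpreter version and location, the sys.path entries, and where (if anywhere) a few SDK modules would be imported from, without importing them. The module list is taken from the Ubuntu 24.04 listing above and may differ on your platform; locate_modules and report are hypothetical names.

```python
import importlib.util
import sys

# Module names taken from the Ubuntu 24.04 package listing above (adjust as needed)
METAVISION_MODULES = ["metavision_core", "metavision_hal", "metavision_sdk_base", "metavision_sdk_core"]


def locate_modules(names=METAVISION_MODULES):
    """Map each top-level module name to where Python would load it from, or None."""
    found = {}
    for name in names:
        spec = importlib.util.find_spec(name)  # locates the module without importing it
        if spec is None:
            found[name] = None
        else:
            # Namespace packages have no single origin file; fall back to their folders
            found[name] = spec.origin or ", ".join(spec.submodule_search_locations or [])
    return found


def report():
    print(f"Python {sys.version.split()[0]} at {sys.executable}")
    for entry in sys.path:
        print("  sys.path:", entry)
    for name, origin in locate_modules().items():
        print(f"  {name}: {origin or 'NOT FOUND'}")


if __name__ == "__main__":
    report()
```

Run it with the same interpreter you use for your script. A NOT FOUND entry means that no sys.path directory contains that module, so revisit the Python version and PYTHONPATH points above.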

What should I do when getting HAL error or warning message “Evt3 protocol violation detected”?

This log message is raised by the EVT3 decoder when it detects a protocol violation. The first countermeasure is to check whether the event rate produced by the sensor can be reduced. To do so, check your lighting, focus, and sensor settings (biases, ROI, ESP, etc.) as explained in the section First recording from live camera with Metavision Studio. You can also tune the behaviour of the EVT3 decoder to choose which protocol violations you want to detect.

How can I get some help if I was unable to fix my problem using online documentation?

This public online documentation covers many topics of our Metavision SDK offer, but more hardware documentation, application notes and support tools can be found in the Prophesee Knowledge Center.

If you are a Prophesee customer, you can access premium content by creating a Knowledge Center account.

If you are still unable to find an answer to your question, as a Prophesee customer, you can ask your question:

to the whole Prophesee event-based community in our Community Forum

to Prophesee Support Team using our support request ticketing tool

Note

While the forum is an excellent place to seek help and share insights, priority support is provided to Premium Support members through support tickets. Additionally, to stay updated with new posts and join the conversation in the forum, you can click the ‘follow’ button in the right menu to follow the community channel.