Note

This C++ sample has a corresponding Python sample.

Sparse Optical Flow Sample using C++

The Computer Vision API can be used to compute the optical flow of objects moving in front of the camera. The algorithm demonstrated in this sample computes optical flow in a sparse way: flow information is generated on clusters of events and not for each event. Some other optical flow algorithms are described in the “Available Optical Flow Algorithms” section below.

The sample metavision_sparse_optical_flow.cpp shows how to implement a pipeline for computing the sparse optical flow.

The source code of this sample can be found in <install-prefix>/share/metavision/sdk/cv/cpp_samples/metavision_sparse_optical_flow

when installing Metavision SDK from installer or packages. For other deployment methods, check the page

Path of Samples.

Expected Output

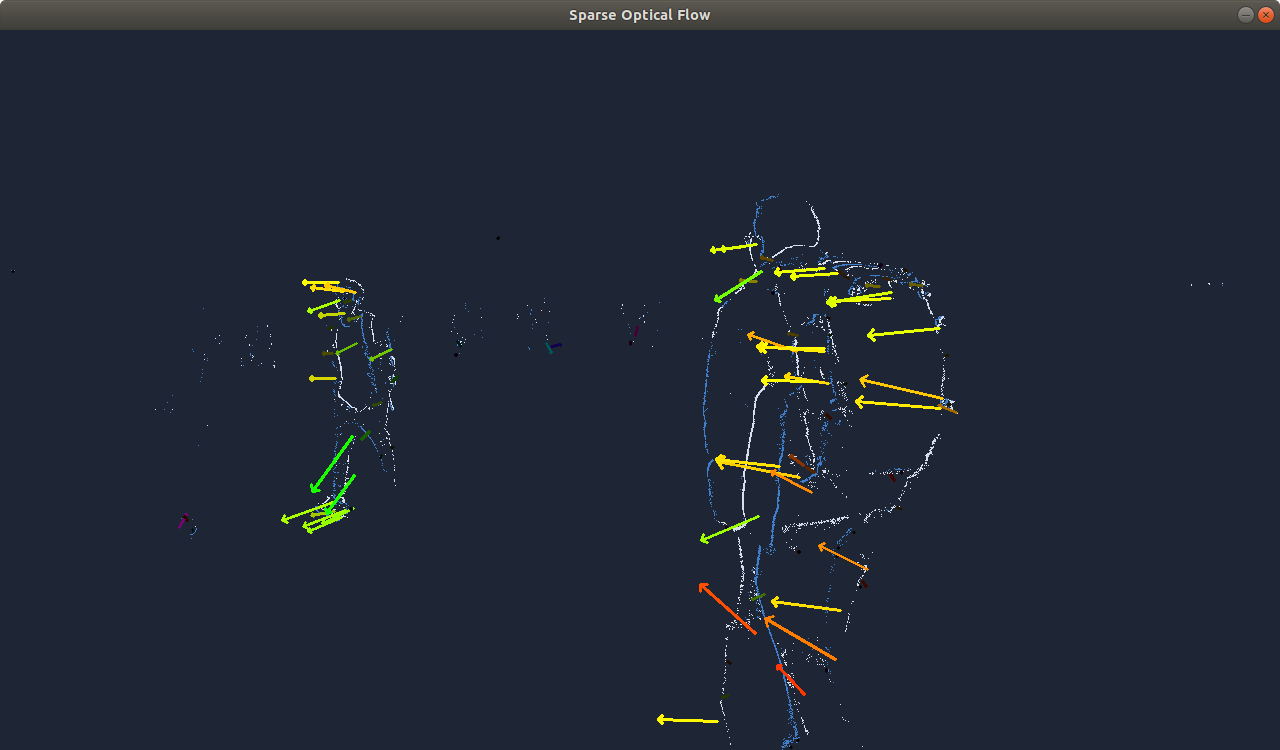

The sample visualizes events and the output optical flow with arrows indicating the direction and magnitude of motion:

The sample can also generate a video with the output flow.

How to start

You can directly execute pre-compiled binary installed with Metavision SDK or compile the source code as described in this tutorial.

To start the sample based on recorded data, provide the full path to a RAW or HDF5 event file (here, we use a file from our Sample Recordings):

Linux

./metavision_sparse_optical_flow -i pedestrians.hdf5

Windows

metavision_sparse_optical_flow.exe -i pedestrians.hdf5

Note

As explained in the Code Overview below, by default

a filter algorithm (Metavision::SpatioTemporalContrastAlgorithmT) is applied

to reduce the noise in the event stream. Depending on your input file, this might not be useful

(or it could even suppress most of the events if another noise filter was already applied when

recording the event file). To disable this filter, use the command line option --sw-stc-threshold 0

To start the sample based on the live stream from your camera, run:

Linux

./metavision_sparse_optical_flow

Windows

metavision_sparse_optical_flow.exe

To start the sample on live stream with some camera settings (biases, ROI, Anti-Flicker, STC etc.)

loaded from a JSON file, you can use

the command line option --input-camera-config (or -j):

Linux

./metavision_sparse_optical_flow -j path/to/my_settings.json

Windows

metavision_sparse_optical_flow.exe -j path\to\my_settings.json

To check for additional options:

Linux

./metavision_sparse_optical_flow -h

Windows

metavision_sparse_optical_flow.exe -h

Available Optical Flow Algorithms

This sample is using the Sparse Optical Flow algorithm, but the SDK API offers several other (dense) optical flow algorithms: Plane Fitting flow, Triplet Matching flow and Time Gradient flow. Those alternate algorithms are demonstrated in the Dense Flow Python Sample.

The main differences between those flow algorithms are the following:

Plane Fitting optical flow:

is based on plane-fitting in local neighborhood in time surface

is a simple and efficient algorithm, but run on all events hence is costly on high event-rate scenes

estimated flow is subject to noise and represents motion along edge normal (not full motion)

Triplet Matching optical flow:

is based on finding aligned events triplets in local neighborhood

is a simple and very efficient algorithm, but run on all events hence is costly on high event-rate scenes

estimated flow is subject to noise and represents motion along edge normal (not full motion)

Time Gradient optical flow:

is based on computing a spatio-temporal gradient on the local time surface using a fixed look-up pattern (i.e. it is essentially a simplified version of the Plane Fitting algorithm in which we only consider the pixels in a cross_shaped region (x0 +/- N, y0 +/- N) instead of a full NxN area around the pixel)

is a simple and very efficient algorithm, but run on all events hence is costly on high event-rate scenes

estimated flow is subject to noise and represents motion along edge normal (not full motion)

Sparse optical flow:

is based on tracking of small edge-like features

is more complex but staged algorithm, leading to higher efficiency on high event-rate scenes

estimated flow represents actual motion, but requires fine tuning and compatible features in the scene

See also

To know more about those flow algorithms, you can check the paper about Plane Fitting Flow, the paper about Triplet Matching Flow and the patent about CCL Sparse Flow

Code Overview

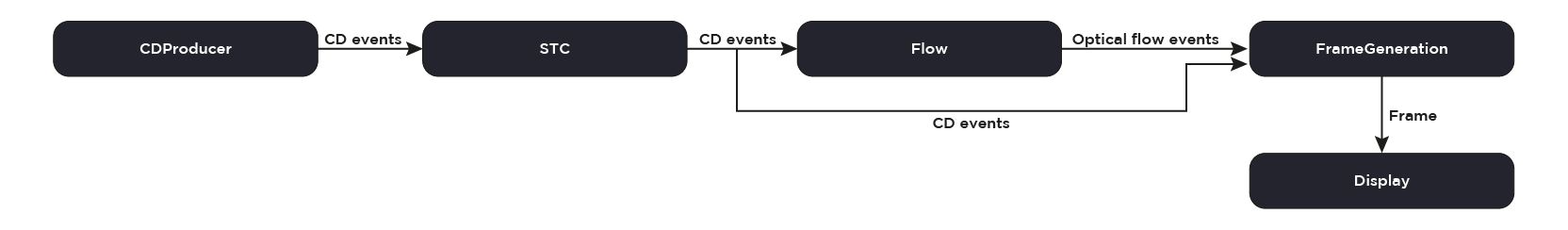

The Metavision Optical Flow sample implements the following data flow:

Spatio Temporal Contrast Filter

First, if the hardware STC of the sensor was not enabled via the camera setting file,

and if the option --sw-stc-threshold was not passed on the command line with the value 0,

then the filter algorithm Metavision::SpatioTemporalContrastAlgorithmT is applied

to reduce the noise in the event stream.

The filtered events are then sent to both the Metavision::SparseOpticalFlowAlgorithm and the Metavision::SparseFlowFrameGeneration.

Sparse Optical Flow Algorithm

The Metavision::SparseOpticalFlowAlgorithm is applied on the events stream and produces

Metavision::EventOpticalFlow events for each internally detected events cluster. Events are clustered

together based on their polarity and timestamp: clusters are formed from events connected spatially and temporally, and flow is

computed for them.

For each incoming event, we search in a 3x3 neighborhood around its position for connection with the most recent events. The connectivity is decided based on the events polarities and timestamps. When a connection is made, we retrieve the cluster ID of the most recent event. If the input event connects to two or more events, we merge the different cluster IDs into a merged ID. We then label the current event with the selected ID or merged ID, or with a new cluster ID if no connection was found.

Illustration of the clustering process for one input event

Once the cluster ID has been determined, the event information are passed to a speed estimator specific for this cluster.

The event’s position and timestamp are processed to update the cluster’s center and speed.

We use a Luenberger state estimator to evaluate those outputs. A Metavision::EventOpticalFlow instance is created with the cluster ID,

center and speed in pixel/s.

The parameters are preset for the sample. To see the full configuration possible, see Metavision::SparseOpticalFlowConfig.

Flow Frame Generator

The Metavision::SparseFlowFrameGeneration uses the Metavision::SparseFlowFrameGeneratorAlgorithm to generate

a frame that will be used later on by the display to visualize the result of the Metavision::SparseOpticalFlowAlgorithm

by rendering the estimated flows on top of the events.

The CD events and optical flow events are synchronized by buffering the incoming events and producing the image when

possible.

In the Metavision::SparseFlowFrameGeneration class, the method Metavision::SparseFlowFrameGeneration::set_output_callback(), allows adding a callback

that is called when output frames are ready to be displayed.

Display

The Metavision::Window class allows us to visualize the previously generated image on the screen:

Going further

Extract Speed Information

With the Metavision::EventOpticalFlow class, you can directly access speed vector components vx and vy of an event stream flow.

To do so, you can modify the C++ source code to save the flow_output variable in a CSV file where columns are respectively (x, y, p, t, vx, vy, id, cx, cy):

x - Column position of the event in the sensor

y - Row position of the event in the sensor

p - Polarity of the event

t - Timestamp of the event (in us)

vx - Speed in X axis in pixels per second in the image plane of the considered event

vy - Speed in Y axis in pixels per second in the image plane of the considered event

id - Feature ID of the considered event

cx - Horizontal coordinate of the center of the feature used to compute speed of the considered event

cy - Vertical coordinate of the center of the feature used to compute speed of the considered event

Speed information vx and vy is expressed in pixel/s.

cx and cy are not always computed for all optical flow algorithms. For example, they are computed in sparse optical flow algorithm and

set to zero in Triplet Matching optical flow algorithm.

First, add the following line in the header:

#include <fstream>

#include <sstream>

#include <vector>

Then, use the following function in the source code:

void writeCSV(const std::string& filename, const std::vector<Metavision::EventOpticalFlow>& flow_output) {

std::ofstream file(filename, std::fstream::app);

if (file) {

for (const auto& obj : flow_output) {

file << obj.x << ","

<< obj.y << ","

<< obj.p << ","

<< obj.t << ","

<< obj.vx << ","

<< obj.vy << ","

<< obj.id << ","

<< obj.center_x << ","

<< obj.center_y << "\n";

}

file.close();

} else {

std::cerr << "Error opening the file.\n";

}

}

Finally add a call to this function just after the call of the Flow algorithm process_events() function.

Essentially, replace this section of the code:

flow_algo.process_events(stc_output.begin(), stc_output.end(), std::back_inserter(flow_output));

With this one:

flow_algo.process_events(stc_output.begin(), stc_output.end(), std::back_inserter(flow_output));

// Write flow event in a csv file

writeCSV("all_flow_events.csv", flow_output);

Finally, compile and execute the sample.

This will create the file all_flow_events.csv with all the columns described above.