Note

This C++ sample has a corresponding Python sample.

Stereo Matching using C++

The CV3D API provides algorithms to estimate depthmaps from a synchronized and calibrated stereo event stream.

The sample metavision_stereo_matching.cpp shows how to:

load a stereo calibration model from a file describing the intrinsics and extrinsics in JSON format

use Metavision SDK SyncedCameraStreamsSlicer to retrieve event slices consistently from two synchronized cameras

use Metavision SDK algorithms to generate and match synchronized contrast maps

visualize the estimated depthmaps
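The matching step can be pictured with a generic sum-of-absolute-differences (SAD) block matcher running on a rectified pair of images. This is only a minimal sketch and not the Metavision SDK implementation. The `Image` type and the `sad_block_matching` function are hypothetical. The sketch also assumes that `ref` is the left image of a rectified pair, so a match lies on the same row, shifted towards smaller x in `other`.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <limits>
#include <vector>

// Hypothetical grayscale image with row-major storage (not a Metavision SDK type).
struct Image {
    int width = 0;
    int height = 0;
    std::vector<std::uint8_t> data;

    int at(int x, int y) const { return data[static_cast<std::size_t>(y) * width + x]; }
};

// Generic SAD block matching on a rectified pair, assuming `ref` is the left image:
// for each pixel, test every disparity d in [0, max_disparity] and keep the one whose
// (2 * radius + 1)^2 window in `other`, shifted left by d on the same row, differs least.
// Pixels whose window would leave the image keep the value -1.
std::vector<int> sad_block_matching(const Image &ref, const Image &other, int radius, int max_disparity) {
    assert(ref.width == other.width && ref.height == other.height);
    std::vector<int> disparity(static_cast<std::size_t>(ref.width) * ref.height, -1);
    for (int y = radius; y < ref.height - radius; ++y) {
        for (int x = radius; x < ref.width - radius; ++x) {
            int best_d = -1;
            long best_cost = std::numeric_limits<long>::max();
            // Stop when the shifted window would fall off the left border of `other`.
            for (int d = 0; d <= max_disparity && x - d - radius >= 0; ++d) {
                long cost = 0;
                for (int dy = -radius; dy <= radius; ++dy)
                    for (int dx = -radius; dx <= radius; ++dx)
                        cost += std::abs(ref.at(x + dx, y + dy) - other.at(x + dx - d, y + dy));
                if (cost < best_cost) {
                    best_cost = cost;
                    best_d = d;
                }
            }
            disparity[static_cast<std::size_t>(y) * ref.width + x] = best_d;
        }
    }
    return disparity;
}
```

SAD is used here only because it is the simplest matching cost. This page does not describe the cost that the SDK actually uses.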

The source code of this sample can be found in <install-prefix>/share/metavision/sdk/cv3d/cpp_samples/metavision_stereo_matching when installing Metavision SDK from installer or packages. For other deployment methods, check the page Path of Samples.

Warning

This stereo block matching pipeline is a sample version meant to showcase the principle of stereo depth estimation using synchronized event streams. Both accuracy and runtime would need to be improved for production use. For this reason, and because runtime increases with the sensor resolution, this sample does not support live stereo event streams.
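For a rectified stereo pair, the principle behind turning a matched disparity into depth is the standard triangulation relation Z = f * B / d. Here f is the rectified focal length in pixels, B is the baseline between the two cameras, and d is the disparity in pixels. This is a minimal sketch of that textbook relation. The function name is hypothetical and is not part of the CV3D API.

```cpp
#include <cassert>

// Depth of a point seen in a rectified stereo pair: Z = f * B / d.
// focal_px: rectified focal length in pixels; baseline: distance between the
// optical centers (Z is returned in the same unit); disparity_px: must be > 0.
double depth_from_disparity(double focal_px, double baseline, double disparity_px) {
    assert(disparity_px > 0.0);
    return focal_px * baseline / disparity_px;
}
```

For example, with f = 200 px and B = 0.5, a disparity of 10 px gives Z = 10, while a disparity of 5 px gives Z = 20, both in the unit of B. Halving the disparity doubles the depth, so a one-pixel matching error costs more depth accuracy on distant points.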

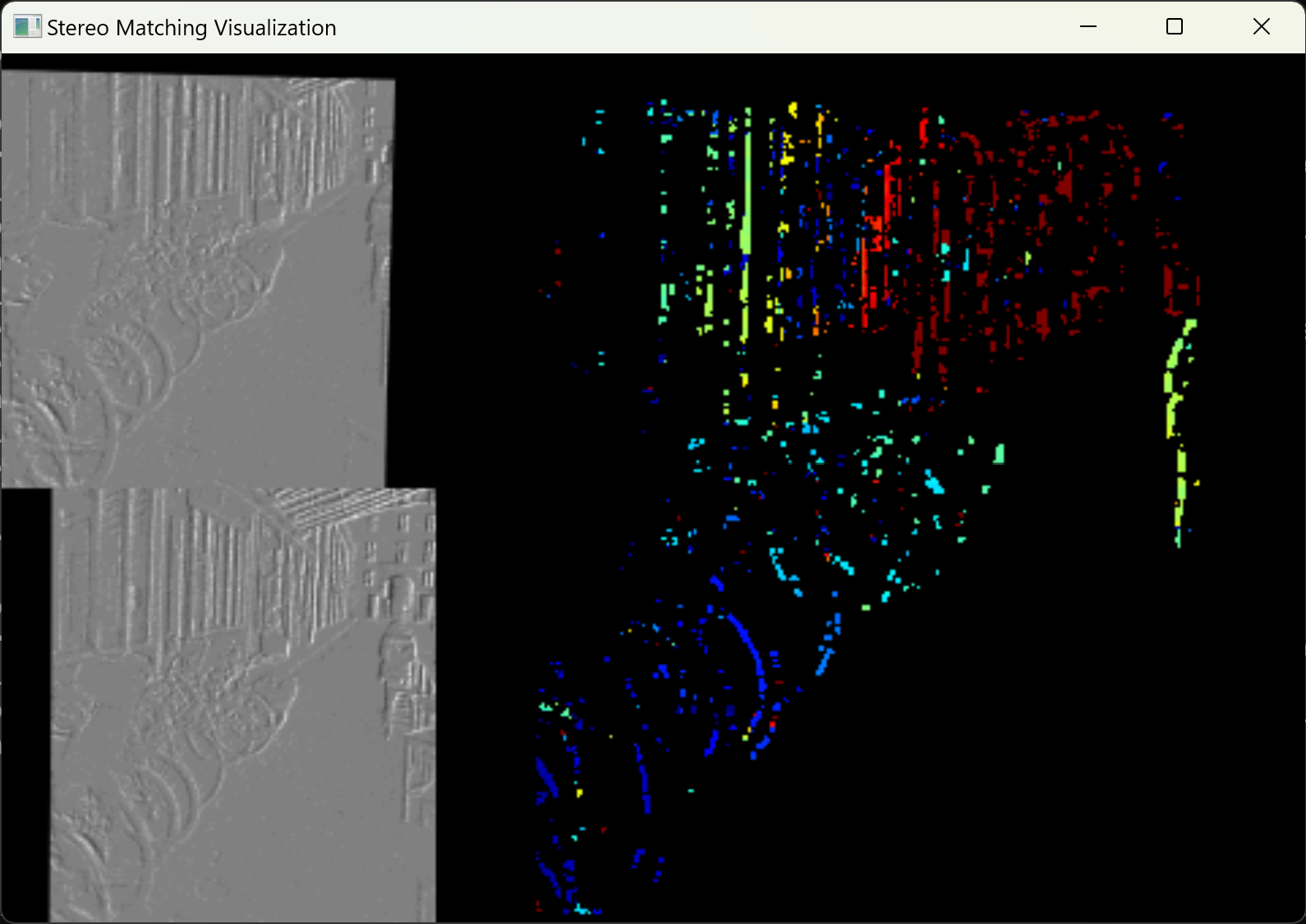

Expected Output

This sample opens a window that displays the rectified contrast maps from the master and slave cameras on the left, and the color-mapped depthmap estimated using stereo block matching on the right, as shown in the following image:

You can also watch the sample in action in this video:

How to start

To run this sample, you will need to provide:

synchronized master and slave event recordings viewing the same scene,

a JSON file containing the intrinsics and extrinsics calibration of the master and slave cameras

Note

The recording courtyard_walk_stereo listed in the sample recordings page can be used to test this sample.

To run the sample, first compile it as described in this tutorial.

Then, to start the sample on the synchronized master and slave recordings, run:

Linux

./metavision_stereo_matching -i <path_to_master_recording> -j <path_to_slave_recording> -c <path_to_calibration>

Windows

metavision_stereo_matching.exe -i <path_to_master_recording> -j <path_to_slave_recording> -c <path_to_calibration>

To check for additional options:

Linux

./metavision_stereo_matching -h

Windows

metavision_stereo_matching.exe -h

Calibration file format

This sample expects to be given a JSON calibration file containing the intrinsics and extrinsics calibration of the master and slave cameras. The JSON file may contain the following nodes:

- proj_master: a dictionary containing the intrinsic parameters of the master camera

- proj_master_path: a string representing the path to the intrinsics calibration file of the master camera

- proj_slave: a dictionary containing the intrinsic parameters of the slave camera

- proj_slave_path: a string representing the path to the intrinsics calibration file of the slave camera

- pose_slave_master: a dictionary containing the extrinsic parameters mapping master camera coordinates to slave camera coordinates

Either proj_master or proj_master_path must be present in the JSON file. The same applies to proj_slave and proj_slave_path.
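The presence rule above can be expressed as a small check on the set of top-level keys read from the calibration file. This is a minimal sketch. JSON parsing is not shown, and `has_valid_projection_keys` is a hypothetical helper. The sketch reads "either ... or" as "at least one of", because this page does not say whether supplying both forms is accepted.

```cpp
#include <set>
#include <string>

// Hypothetical check of the rule stated above, applied to the top-level keys
// of a calibration JSON file: each camera needs an inline dictionary or a path.
bool has_valid_projection_keys(const std::set<std::string> &keys) {
    const bool master = keys.count("proj_master") > 0 || keys.count("proj_master_path") > 0;
    const bool slave = keys.count("proj_slave") > 0 || keys.count("proj_slave_path") > 0;
    return master && slave;
}
```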

The intrinsic parameters are represented as a dictionary with the following keys:

- type: a string representing the type of the camera model. Only PINHOLE is currently supported by the stereo matching algorithms.

- width: an integer representing the width of the camera image

- height: an integer representing the height of the camera image

- K: a list of 9 floats representing the intrinsic matrix of the camera, in row-major order

- D: a list of 5 floats representing the distortion coefficients of the camera
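Because K is stored in row-major order, element (row r, column c) is at index 3 * r + c. The sketch below uses that layout to project a 3D point given in camera coordinates with the ideal pinhole model. It assumes the usual layout [fx, s, cx; 0, fy, cy; 0, 0, 1] and ignores the distortion coefficients D. The 5 coefficients in D plausibly follow the common (k1, k2, p1, p2, k3) ordering used by OpenCV, but that is an assumption this page does not confirm. `project_pinhole` and `Pixel` are hypothetical names.

```cpp
#include <array>
#include <cassert>

// 3x3 intrinsic matrix K as 9 values in row-major order, as in the calibration file.
using Mat3RowMajor = std::array<double, 9>;

struct Pixel {
    double u;
    double v;
};

// Ideal pinhole projection of a point (x, y, z) in camera coordinates,
// ignoring lens distortion. Element (r, c) of K is K[3 * r + c].
Pixel project_pinhole(const Mat3RowMajor &K, double x, double y, double z) {
    assert(z > 0.0);  // the point must lie in front of the camera
    const double xn = x / z;
    const double yn = y / z;
    // u = fx * xn + s * yn + cx, v = fy * yn + cy
    return {K[0] * xn + K[1] * yn + K[2], K[4] * yn + K[5]};
}
```

A point on the optical axis, such as (0, 0, 1), lands exactly on the principal point (cx, cy), that is, on K[2] and K[5].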

The extrinsic parameters are represented as a dictionary with the following possible keys:

- T: a list of 16 floats representing the 4x4 master-to-slave transform matrix, in row-major order

- rvec: a list of 3 floats representing the rotation vector of the master-to-slave transform

- tvec: a list of 3 floats representing the translation vector of the master-to-slave transform

Either T or both rvec and tvec must be present in pose_slave_master.
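When rvec and tvec are given instead of T, the equivalent 4x4 row-major matrix can be rebuilt from them. This sketch assumes that rvec is an axis-angle vector, with direction as the rotation axis and norm as the angle in radians, as in OpenCV's Rodrigues convention. This page does not state that convention. The function names are hypothetical.

```cpp
#include <array>
#include <cmath>

using Mat4RowMajor = std::array<double, 16>;

// Build the master-to-slave transform T (row-major) from rvec and tvec using
// Rodrigues' formula R = I + sin(theta) K + (1 - cos(theta)) K^2, where K is
// the cross-product matrix of the unit rotation axis (assumed convention).
Mat4RowMajor transform_from_rvec_tvec(const std::array<double, 3> &rvec, const std::array<double, 3> &tvec) {
    const double theta = std::sqrt(rvec[0] * rvec[0] + rvec[1] * rvec[1] + rvec[2] * rvec[2]);
    double R[3][3] = {{1, 0, 0}, {0, 1, 0}, {0, 0, 1}};
    if (theta > 1e-12) {
        const double kx = rvec[0] / theta, ky = rvec[1] / theta, kz = rvec[2] / theta;
        const double K[3][3] = {{0, -kz, ky}, {kz, 0, -kx}, {-ky, kx, 0}};
        const double s = std::sin(theta), c = 1.0 - std::cos(theta);
        for (int i = 0; i < 3; ++i)
            for (int j = 0; j < 3; ++j) {
                double k2 = 0.0;  // element (i, j) of K * K
                for (int m = 0; m < 3; ++m) k2 += K[i][m] * K[m][j];
                R[i][j] += s * K[i][j] + c * k2;
            }
    }
    Mat4RowMajor T{};
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) T[4 * i + j] = R[i][j];
        T[4 * i + 3] = tvec[i];  // translation in the last column
    }
    T[15] = 1.0;  // last row is [0, 0, 0, 1]
    return T;
}

// Map a point from master to slave camera coordinates: p_slave = T * [p_master; 1].
std::array<double, 3> master_to_slave(const Mat4RowMajor &T, const std::array<double, 3> &p) {
    std::array<double, 3> out{};
    for (int i = 0; i < 3; ++i)
        out[i] = T[4 * i] * p[0] + T[4 * i + 1] * p[1] + T[4 * i + 2] * p[2] + T[4 * i + 3];
    return out;
}
```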

The following is an example of a JSON calibration file:

{
  "proj_master": {
    "description": "right camera",
    "type": "PINHOLE",
    "width": 320,
    "height": 320,
    "K": [284.826868839897, 0.0, 180.1176851085463,
          0.0, 284.826868839897, 65.33424557032014,
          0.0, 0.0, 1.0],
    "D": [-0.0006165445620834394, -0.010756857721293627, 0.0007368814791982024, 0.00021834472440317886, 0.0]
  },
  "proj_slave": {
    "description": "left camera",
    "type": "PINHOLE",
    "width": 320,
    "height": 320,
    "K": [284.1171049513969, 0.0, 92.56905432351442,
          0.0, 284.1171049513969, 128.88043203708568,
          0.0, 0.0, 1.0],
    "D": [-0.014835951608123964, -0.0024538708512070514, 0.0008345248956209603, -0.0008806256086698127, 0.0]
  },
  "pose_slave_master": {
    "T": [0.99957275, -0.01918195, -0.02205372, 0.17025425990742402,
          0.01814686, 0.99876672, -0.04621387, 0.00048763471099667595,
          0.02291299, 0.04579392, 0.9986881, -0.0020931137065557668,
          0, 0, 0, 1]
  }
}
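In a row-major 4x4 rigid transform, the translation occupies indices 3, 7 and 11. Its norm is the distance between the two optical centers, which is the stereo baseline B used in triangulation. This sketch reads that distance from the T of the example file above. The unit is whatever unit the calibration uses (plausibly meters, but this page does not say). `baseline_from_transform` is a hypothetical helper.

```cpp
#include <array>
#include <cmath>

// T from the example calibration file (master-to-slave, 4x4, row-major).
const std::array<double, 16> kExampleT = {
    0.99957275, -0.01918195, -0.02205372, 0.17025425990742402,
    0.01814686, 0.99876672, -0.04621387, 0.00048763471099667595,
    0.02291299, 0.04579392, 0.9986881, -0.0020931137065557668,
    0, 0, 0, 1};

// Since p_slave = R * p_master + t, the master optical center maps to t in slave
// coordinates, so |t| is the distance between the two optical centers.
double baseline_from_transform(const std::array<double, 16> &T) {
    return std::sqrt(T[3] * T[3] + T[7] * T[7] + T[11] * T[11]);
}
```

For this file the baseline comes out at about 0.1703. The translation is dominated by its x component, which is consistent with a horizontal stereo setup.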

The following is another example of a valid JSON calibration file:

{
  "proj_master_path": "/path/to/intrinsics_master.json",
  "proj_slave_path": "/path/to/intrinsics_slave.json",
  "pose_slave_master": {
    "rvec": [0.0, 0.0, 0.0],
    "tvec": [0.0, 0.0, 0.0]
  }
}