Example of Building a Complex Pipeline

The Metavision Composed Viewer code sample, found in the Core module, is used here to show how to build a more complex pipeline with non-linear connections (e.g. stages with multiple inputs and multiple outputs). This sample does not implement any custom stage: it uses only stages that already exist in the Metavision SDK.

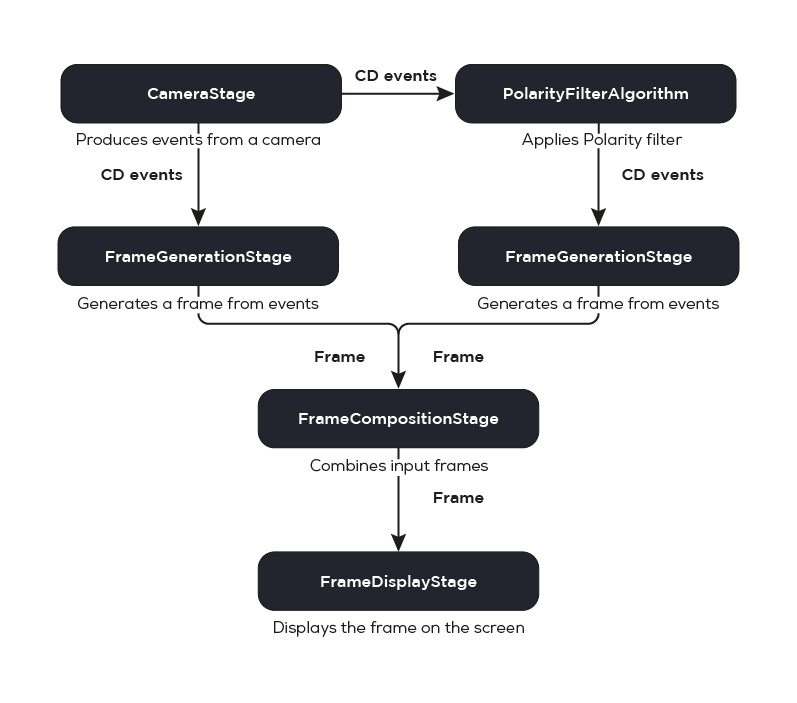

The sample demonstrates how to acquire events data (from a live camera or an event file), filter events and show a frame

combining unfiltered and filtered events. It also shows how to set a custom consuming callback on a

FrameCompositionStage instance, so that it can consume data from multiple

stages.

The pipeline can be represented by this graph:

The sample includes only the main function that creates the pipeline and connects the stages:

    int main(int argc, char *argv[]) {
        std::string event_file_path;

        const std::string program_desc("Code sample demonstrating how to use Metavision SDK CV to filter events\n"
                                       "and show a frame combining unfiltered and filtered events.\n");

        po::options_description options_desc("Options");
        // clang-format off
        options_desc.add_options()
            ("help,h", "Produce help message.")
            ("input-event-file,i", po::value<std::string>(&event_file_path), "Path to input event file (RAW or HDF5). If not specified, the camera live stream is used.")
            ;
        // clang-format on

        po::variables_map vm;
        try {
            po::store(po::command_line_parser(argc, argv).options(options_desc).run(), vm);
            po::notify(vm);
        } catch (po::error &e) {
            MV_LOG_ERROR() << program_desc;
            MV_LOG_ERROR() << options_desc;
            MV_LOG_ERROR() << "Parsing error:" << e.what();
            return 1;
        }

        if (vm.count("help")) {
            MV_LOG_INFO() << program_desc;
            MV_LOG_INFO() << options_desc;
            return 0;
        }

        // A pipeline for which all added stages will automatically be run in their own processing threads (if applicable)
        Metavision::Pipeline p(true);

        // Construct a camera from a file or a live stream
        Metavision::Camera cam;
        if (!event_file_path.empty()) {
            cam = Metavision::Camera::from_file(event_file_path);
        } else {
            cam = Metavision::Camera::from_first_available();
        }
        const unsigned short width  = cam.geometry().width();
        const unsigned short height = cam.geometry().height();

        const Metavision::timestamp event_buffer_duration_ms = 2;
        const uint32_t accumulation_time_ms                  = 10;
        const int display_fps                                = 100;

        /// Pipeline
        //
        //  0 (Camera) ---------------->---------------- 1 (Polarity Filter)
        //  |                                            |
        //  v                                            v
        //  |                                            |
        //  2 (Frame Generation)                         3 (Frame Generation)
        //  |                                            |
        //  v                                            v
        //  |------>-----  4 (Frame Composer)  ----<-----|
        //                 |
        //                 v
        //                 |
        //                 5 (Display)
        //

        // 0) Stage producing events from a camera
        auto &cam_stage = p.add_stage(std::make_unique<Metavision::CameraStage>(std::move(cam), event_buffer_duration_ms));

        // 1) Stage wrapping a polarity filter algorithm to keep positive events
        auto &pol_filter_stage = p.add_algorithm_stage(std::make_unique<Metavision::PolarityFilterAlgorithm>(1), cam_stage);

        // 2,3) Stages generating frames from the previous stages
        auto &left_frame_stage = p.add_stage(std::make_unique<Metavision::FrameGenerationStage>(width, height, accumulation_time_ms), cam_stage);
        auto &right_frame_stage = p.add_stage(
            std::make_unique<Metavision::FrameGenerationStage>(width, height, accumulation_time_ms), pol_filter_stage);

        // 4) Stage generating a combined frame
        auto &full_frame_stage = p.add_stage(std::make_unique<Metavision::FrameCompositionStage>(display_fps));
        full_frame_stage.add_previous_frame_stage(left_frame_stage, 0, 0, width, height);
        full_frame_stage.add_previous_frame_stage(right_frame_stage, width + 10, 0, width, height);

        // 5) Stage displaying the combined frame
        const auto full_width  = full_frame_stage.frame_composer().get_total_width();
        const auto full_height = full_frame_stage.frame_composer().get_total_height();
        auto &disp_stage = p.add_stage(
            std::make_unique<Metavision::FrameDisplayStage>("CD & noise filtered CD events", full_width, full_height),
            full_frame_stage);

        // Run the pipeline and wait for its completion
        p.run();

        return 0;
    }
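To make the non-linear shape of this pipeline concrete, the graph from the sample's comment block can be modelled as a small adjacency list. The sketch below is standalone and does not use the SDK: the function names composed_viewer_edges and topological_order are hypothetical, and the ordering algorithm (Kahn's algorithm) is only an illustration of how producers precede consumers, not a description of how Metavision::Pipeline schedules stages.

```cpp
#include <cstddef>
#include <queue>
#include <vector>

// Hypothetical model of the sample's graph: edges[i] lists the stages fed by stage i.
// Indices follow the numbering used in the sample's comment block.
inline std::vector<std::vector<std::size_t>> composed_viewer_edges() {
    return {
        {1, 2}, // 0 (Camera) feeds the polarity filter and the left frame generator
        {3},    // 1 (Polarity Filter) feeds the right frame generator
        {4},    // 2 (Frame Generation) feeds the composer
        {4},    // 3 (Frame Generation) feeds the composer
        {5},    // 4 (Frame Composer) feeds the display
        {}      // 5 (Display) is a sink
    };
}

// Kahn's algorithm: returns the stages in an order where every producer precedes its consumers.
inline std::vector<std::size_t> topological_order(const std::vector<std::vector<std::size_t>> &edges) {
    std::vector<std::size_t> in_degree(edges.size(), 0);
    for (const auto &outs : edges)
        for (std::size_t to : outs)
            ++in_degree[to];

    std::queue<std::size_t> ready;
    for (std::size_t i = 0; i < edges.size(); ++i)
        if (in_degree[i] == 0)
            ready.push(i);

    std::vector<std::size_t> order;
    while (!ready.empty()) {
        const std::size_t s = ready.front();
        ready.pop();
        order.push_back(s);
        for (std::size_t to : edges[s])
            if (--in_degree[to] == 0)
                ready.push(to);
    }
    return order;
}
```

In this model, stage 0 has two outgoing edges (multiple outputs) and stage 4 has two incoming edges (multiple inputs), which is what distinguishes this pipeline from a simple linear chain.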

Instantiating a Pipeline

First, we instantiate Pipeline using the Pipeline::Pipeline(bool auto_detach) constructor:

Metavision::Pipeline p(true);

We pass true as the auto_detach argument so that the pipeline runs each added stage in its own processing thread (if applicable).
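As a rough illustration of what running stages in their own threads implies, the sketch below connects a producer and a consumer through a blocking queue, each on a separate std::thread. It is a generic C++17 example, not the SDK's implementation: the Channel class and run_two_threaded_stages function are hypothetical names introduced here.

```cpp
#include <condition_variable>
#include <mutex>
#include <optional>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical blocking queue connecting two stages; not an SDK type.
template<typename T>
class Channel {
public:
    void push(T value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            queue_.push(std::move(value));
        }
        cv_.notify_one();
    }

    // Signals that no more values will be pushed.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        cv_.notify_all();
    }

    // Blocks until a value is available; returns std::nullopt once closed and drained.
    std::optional<T> pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return !queue_.empty() || closed_; });
        if (queue_.empty())
            return std::nullopt;
        T value = std::move(queue_.front());
        queue_.pop();
        return value;
    }

private:
    std::mutex mutex_;
    std::condition_variable cv_;
    std::queue<T> queue_;
    bool closed_ = false;
};

// Runs a producer stage and a consumer stage in separate threads
// and returns the sum of the values the consumer received.
inline long run_two_threaded_stages(int count) {
    Channel<int> channel;
    long sum = 0;
    std::thread producer([&] {
        for (int i = 1; i <= count; ++i)
            channel.push(i);
        channel.close();
    });
    std::thread consumer([&] {
        while (auto value = channel.pop())
            sum += *value;
    });
    producer.join();
    consumer.join();
    return sum;
}
```

The point of the design is that the producer never waits for the consumer to finish processing: it only hands data over, which is the kind of decoupling a per-stage thread provides.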

Adding Stages to the Pipeline

Once the pipeline is instantiated, we add stages to it.

As the first stage, we add the CameraStage, which produces CD events from a live camera or an event file (RAW or HDF5):

auto &cam_stage = p.add_stage(std::make_unique<Metavision::CameraStage>(std::move(cam), event_buffer_duration_ms));

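Then, we add the PolarityFilterAlgorithm to keep only events of the given polarity (here 1, i.e. positive events). Because it is an algorithm rather than a stage, it is added with add_algorithm_stage, with cam_stage as its previous stage: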

auto &pol_filter_stage = p.add_algorithm_stage(std::make_unique<Metavision::PolarityFilterAlgorithm>(1), cam_stage);
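Conceptually, filtering by polarity amounts to keeping the events whose polarity field matches a given value. The following standalone sketch shows that idea with a hypothetical Event struct and keep_polarity function; neither is an SDK type, and the SDK's own algorithm may be implemented differently.

```cpp
#include <algorithm>
#include <cstdint>
#include <iterator>
#include <vector>

// Hypothetical minimal event record (x, y, polarity, timestamp in microseconds).
// This is an assumption for illustration, not the SDK's event type.
struct Event {
    std::uint16_t x;
    std::uint16_t y;
    std::int16_t p;
    std::int64_t t;
};

// Returns a copy of the events whose polarity equals `polarity`, preserving their order.
inline std::vector<Event> keep_polarity(const std::vector<Event> &events, std::int16_t polarity) {
    std::vector<Event> kept;
    std::copy_if(events.begin(), events.end(), std::back_inserter(kept),
                 [polarity](const Event &ev) { return ev.p == polarity; });
    return kept;
}
```

Calling keep_polarity(events, 1) on a buffer mirrors the role the filter plays between the camera stage and the right-hand frame generator.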

Then, we add two FrameGenerationStage stages to generate frames from the output of the two previous stages. Note that we call the same function twice, the only difference being the previous stage: cam_stage for the first and pol_filter_stage for the second.

    auto &left_frame_stage = p.add_stage(
        std::make_unique<Metavision::FrameGenerationStage>(width, height, accumulation_time_ms),
        cam_stage);
    auto &right_frame_stage = p.add_stage(
        std::make_unique<Metavision::FrameGenerationStage>(width, height, accumulation_time_ms),
        pol_filter_stage);
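To give an intuition for what generating a frame from events over an accumulation time means, here is a minimal standalone sketch. The PixelEvent struct, the accumulate_frame function, the half-open time window, and the gray/white/black color choice are all assumptions made for illustration; the SDK's frame generation may use different conventions.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical event record (x, y, polarity, timestamp in microseconds); not the SDK's type.
struct PixelEvent {
    std::uint16_t x;
    std::uint16_t y;
    std::int16_t p;
    std::int64_t t;
};

// Builds a row-major grayscale frame from the events whose timestamp lies in
// [frame_ts - accumulation_time_us, frame_ts). Pixels without events stay gray (128);
// positive events are drawn white (255) and other events black (0).
inline std::vector<std::uint8_t> accumulate_frame(const std::vector<PixelEvent> &events, int width, int height,
                                                  std::int64_t frame_ts, std::int64_t accumulation_time_us) {
    std::vector<std::uint8_t> frame(static_cast<std::size_t>(width) * height, 128);
    for (const PixelEvent &ev : events) {
        const bool in_window = ev.t >= frame_ts - accumulation_time_us && ev.t < frame_ts;
        const bool in_bounds = ev.x < width && ev.y < height;
        if (in_window && in_bounds)
            frame[static_cast<std::size_t>(ev.y) * width + ev.x] = ev.p > 0 ? 255 : 0;
    }
    return frame;
}
```

Feeding the same function once with all events and once with only the filtered events reproduces, in miniature, the two branches that the left and right frame stages handle in the sample.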

Then, we add the FrameCompositionStage. This stage can be used to generate a single frame showing, side by side, the output of two different producers, in this case left_frame_stage and right_frame_stage. Here, we specify the previous stages with the FrameCompositionStage::add_previous_frame_stage function:

    auto &full_frame_stage = p.add_stage(std::make_unique<Metavision::FrameCompositionStage>(display_fps));
    full_frame_stage.add_previous_frame_stage(left_frame_stage, 0, 0, width, height);
    full_frame_stage.add_previous_frame_stage(right_frame_stage, width + 10, 0, width, height);
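The placement arguments above put the right frame 10 pixels to the right of the left frame. As a sketch of the resulting layout arithmetic, the standalone code below computes the bounding box of a set of sub-frame placements. Placement, CanvasSize, and bounding_canvas are hypothetical names, and treating the composed frame size as this bounding box is an assumption, not a statement about how the SDK's frame composer computes its total size.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical description of where a sub-frame is drawn in the composed frame.
struct Placement {
    int x;
    int y;
    int width;
    int height;
};

struct CanvasSize {
    int width;
    int height;
};

// Returns the smallest canvas, anchored at (0, 0), that contains every placement.
inline CanvasSize bounding_canvas(const std::vector<Placement> &placements) {
    CanvasSize size{0, 0};
    for (const Placement &p : placements) {
        size.width  = std::max(size.width, p.x + p.width);
        size.height = std::max(size.height, p.y + p.height);
    }
    return size;
}
```

For example, with a 640x480 sensor, the two placements (0, 0, 640, 480) and (650, 0, 640, 480) give a 1290x480 canvas under this assumption.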

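Finally, as the last stage, we add the FrameDisplayStage to display the final combined frame on the screen. Its size is taken from the frame composer of full_frame_stage:

    const auto full_width  = full_frame_stage.frame_composer().get_total_width();
    const auto full_height = full_frame_stage.frame_composer().get_total_height();
    auto &disp_stage = p.add_stage(
        std::make_unique<Metavision::FrameDisplayStage>("CD & noise filtered CD events", full_width, full_height),
        full_frame_stage);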

Running the Pipeline

Now that the pipeline is set up and all stages are added, we run it by calling the Pipeline::run() function, which returns once the pipeline has completed:

p.run();

After this example, you can move on to an example of a Pipeline with a Custom Stage.