Note

This C++ sample has a corresponding Python sample.

Object Counting using C++

The Analytics API provides algorithms to count small, fast-moving objects.

The sample metavision_counting.cpp shows how to count and display the objects passing in front of the camera.

Objects are counted per line (by default, 4 horizontal lines are used), and they are expected to move from top to bottom, as in free fall. Each object is counted when it crosses one of the horizontal lines. The number of lines and their positions can be specified using command-line arguments.

The source code of this sample can be found in <install-prefix>/share/metavision/sdk/analytics/cpp_samples/metavision_counting when installing Metavision SDK from installer or packages. For other deployment methods, check the page Path of Samples.

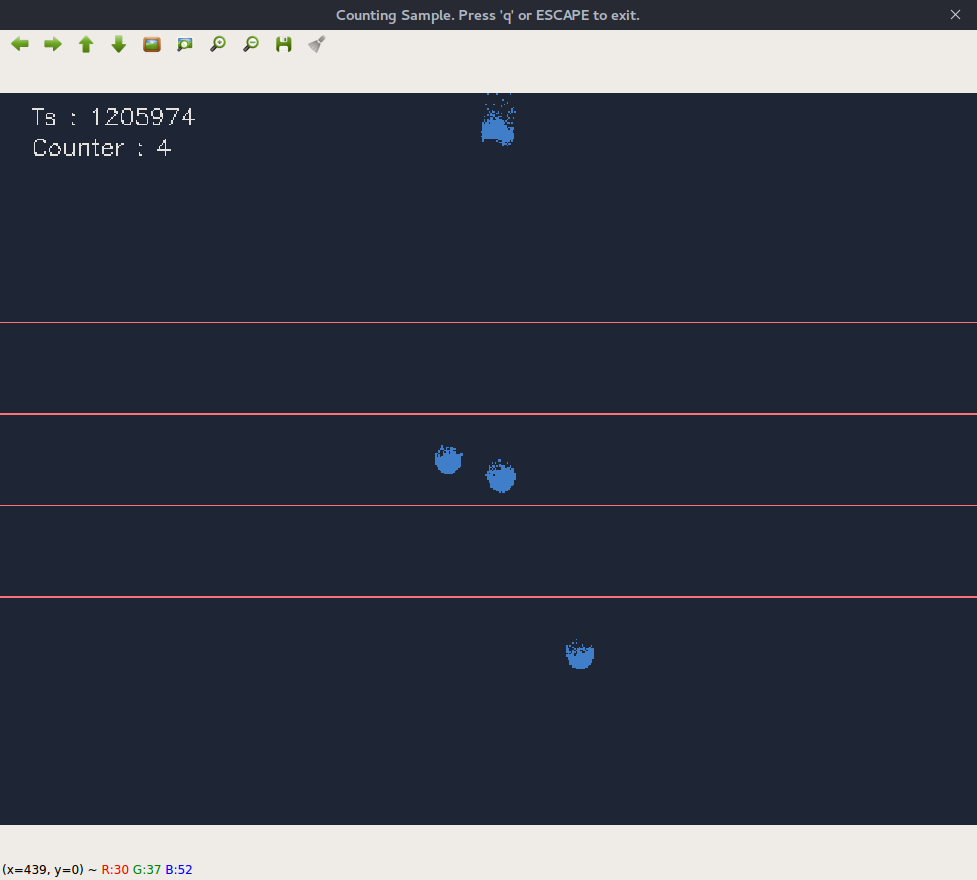

Expected Output

The Metavision Counting sample visualizes the events (from moving objects), the lines on which objects are counted, and the total object counter:

Setup & requirements

To count objects accurately, the following conditions should be met:

the camera should be static and the object in focus

there should be good contrast between the background and the objects (using a uniform backlight helps to get good results)

set the camera to have minimal background noise (for example, remove flickering lights)

the events triggered by an object passing in front of the camera should be clustered as much as possible (i.e. no holes in the objects to avoid multiple detections)

We also recommend choosing the optics and the distance to the objects so that an object seen by the camera is at least 5 pixels in size. Together with your chosen optics, this defines the minimum size of the objects you can count.

Finally, depending on the speed of your objects (especially for high-speed objects), you might have to tune the sensor biases to get better data (make the sensor faster and/or less or more sensitive) using the --input-camera-config option (see below).
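The 5-pixel recommendation above can be checked with a quick estimate before setting up the optics. The following is a minimal sketch that assumes a simple pinhole-camera approximation; the function names and the example values (focal length, distance, pixel pitch) are hypothetical and are not part of Metavision SDK.

```cpp
#include <cassert>

// Hypothetical helper (not part of Metavision SDK).
// Approximate size, in pixels, of an object projected on the sensor,
// using the pinhole approximation:
//   size_on_sensor = focal_length * object_size / distance
// Lengths are in millimeters, the pixel pitch is in micrometers.
double projected_size_px(double focal_length_mm, double object_size_mm,
                         double distance_mm, double pixel_pitch_um) {
    assert(distance_mm > 0.0 && pixel_pitch_um > 0.0);
    const double size_on_sensor_mm = focal_length_mm * object_size_mm / distance_mm;
    return size_on_sensor_mm * 1000.0 / pixel_pitch_um;
}

// True when the object covers at least min_size_px pixels
// (5 by default, following the recommendation above).
bool is_large_enough(double size_px, double min_size_px = 5.0) {
    return size_px >= min_size_px;
}

// Smallest object size (in millimeters) that still covers min_size_px pixels
// for a given lens, distance and pixel pitch (same approximation, inverted).
double min_object_size_mm(double focal_length_mm, double distance_mm,
                          double pixel_pitch_um, double min_size_px = 5.0) {
    assert(focal_length_mm > 0.0);
    return min_size_px * (pixel_pitch_um / 1000.0) * distance_mm / focal_length_mm;
}
```

For instance, with an assumed 8 mm lens, a 15 µm pixel pitch and objects 200 mm away, a 2 mm object projects to roughly 5.3 pixels, while a 1 mm object projects to about 2.7 pixels and would be below the recommended size.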

How to start

You can directly execute the pre-compiled binary installed with Metavision SDK or compile the source code as described in this tutorial.

To start the sample based on recorded data, provide the full path to a RAW or HDF5 event file (here, we use a file from our Sample Recordings):

Linux

./metavision_counting -i 80_balls.hdf5

Windows

metavision_counting.exe -i 80_balls.hdf5

To start the sample based on the live stream from your camera, run:

Linux

./metavision_counting

Windows

metavision_counting.exe

To start the sample on a live stream with some camera settings (like the biases mentioned above, or ROI, Anti-Flicker, STC, etc.) loaded from a JSON file, you can use the command line option --input-camera-config (or -j):

Linux

./metavision_counting -j path/to/my_settings.json

Windows

metavision_counting.exe -j path\to\my_settings.json

To check for additional options:

Linux

./metavision_counting -h

Windows

metavision_counting.exe -h

Code Overview

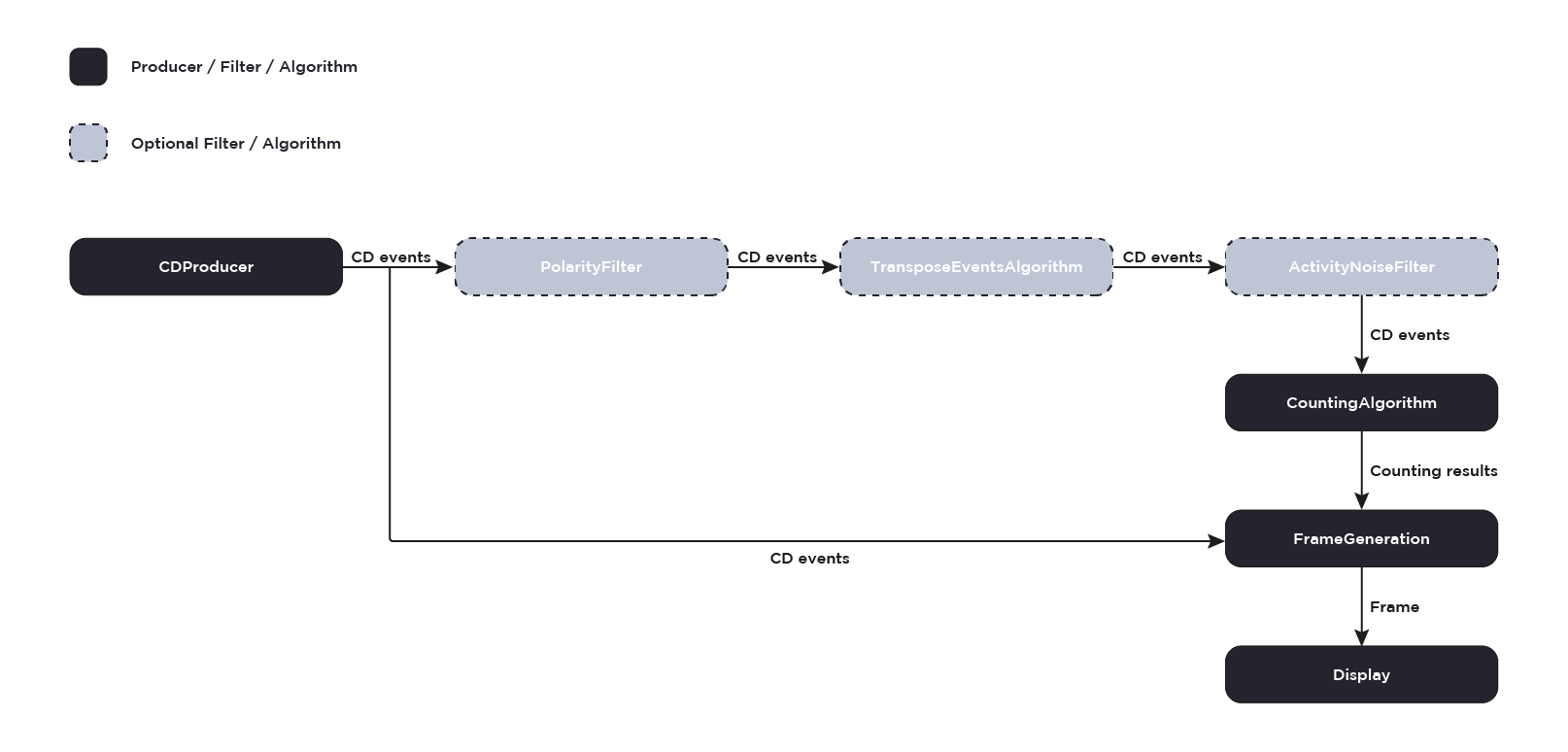

Pipeline

The Metavision Counting sample implements the following pipeline:

Optional Pre-Processing Filters/Algorithms

To improve the quality of initial data, some pre-processing filters can be applied upstream of the algorithm:

Metavision::PolarityFilterAlgorithm is used to select only one polarity to count the objects. Using only one polarity gives the sharpest shapes possible and prevents multiple counts for the same object.

Metavision::TransposeEventsAlgorithm changes the orientation of the events. Note that Metavision::CountingAlgorithm requires objects to move from top to bottom; if your setup doesn’t allow it, this filter/algorithm is useful for changing the orientation of events.

Metavision::ActivityNoiseFilterAlgorithm aims to reduce noise in the event stream that could produce false counts.

Note

These filters are optional: experiment with your setup to get the best results.
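To give an idea of what the first two filters do to the event stream, here is a minimal sketch written with the standard library only. The `Event` struct is a hypothetical stand-in for Metavision::EventCD, and `keep_polarity` and `transpose` are illustrative helpers, not the SDK classes or their implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <iterator>
#include <utility>
#include <vector>

// Hypothetical minimal event type, standing in for Metavision::EventCD.
struct Event {
    std::uint16_t x, y; // pixel coordinates
    std::int16_t p;     // polarity (0 or 1)
    std::int64_t t;     // timestamp in microseconds
};

// Keep only the events whose polarity matches `polarity`.
std::vector<Event> keep_polarity(const std::vector<Event> &events, std::int16_t polarity) {
    assert(polarity == 0 || polarity == 1);
    std::vector<Event> out;
    std::copy_if(events.begin(), events.end(), std::back_inserter(out),
                 [polarity](const Event &ev) { return ev.p == polarity; });
    return out;
}

// Swap x and y, so that a left-to-right motion becomes a top-to-bottom one.
std::vector<Event> transpose(std::vector<Event> events) {
    for (Event &ev : events)
        std::swap(ev.x, ev.y);
    return events;
}
```

In this sketch, an object moving along increasing x before the transposition moves along increasing y afterwards, which matches the top-to-bottom direction expected by the counting step.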

Counting Algorithm

This is the main algorithm in this sample. The algorithm is configured to count objects of a given size passing from top to bottom in front of the camera.

To create an instance of Metavision::CountingAlgorithm, we first need to gather some configuration information, such as the size of the objects to count, their speed and their distance from the camera. The size of those objects in the camera’s image plane depends on the optic used, their distance to the camera and their speed.

The Metavision::CountingCalibration class makes it possible to compute these parameters and pass them to the algorithm.

Once we have a valid calibration, we can create an instance of Metavision::CountingAlgorithm:

    // CALIBRATION
    const auto calib_results = Metavision::CountingCalibration::calibrate(
        sensor_width, sensor_height, object_min_size_, object_average_speed_, distance_object_camera_);

    // CountingAlgorithm
    counting_algo_ = std::make_unique<Metavision::CountingAlgorithm>(
        sensor_width, sensor_height, calib_results.cluster_ths, calib_results.accumulation_time_us);

The Metavision::CountingAlgorithm relies on lines of interest to count the objects passing in front of the camera and produces Metavision::MonoCountingStatus as output. A Metavision::MonoCountingStatus consists of both a global counter and local counters (i.e. one per line). Local counters are incremented every time an object crosses their corresponding line, while the global counter is the maximum of all the local counters. These counters are not reset between two calls but updated throughout the sequence.

The algorithm is implemented asynchronously, which makes it possible to retrieve new counter estimates at a fixed refresh rate rather than for each processed buffer of events. As these counters are mainly used for visualization purposes, the asynchronous approach has proven to be more efficient in this case.
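The relationship between the local counters and the global counter can be sketched as follows. `LineCounters` is a hypothetical stand-in written for illustration only; it is not Metavision::MonoCountingStatus and does not detect line crossings itself.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the counting status described above:
// one local counter per line of interest, plus a derived global counter.
struct LineCounters {
    std::vector<int> local; // local[i] counts the crossings of line i

    explicit LineCounters(std::size_t line_count) : local(line_count, 0) {}

    // To be called when an object crosses the line with index `line`.
    // Counters are never reset: they accumulate over the whole sequence.
    void on_crossing(std::size_t line) {
        assert(line < local.size());
        ++local[line];
    }

    // The global counter is the maximum of all the local counters.
    int global() const {
        return local.empty() ? 0 : *std::max_element(local.begin(), local.end());
    }
};
```

One consequence of taking the maximum, in this sketch at least, is that an object missed on one line still contributes to the global count as long as another line has seen every object so far.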

Frame Generation

At this step, we generate the image that is displayed while the sample is running. This frame shows:

the events

the lines of interest used by the algorithm

the global counter

The Metavision::EventsFrameGeneratorAlgorithm class is used to ease the synchronization between the events and the counting results. This class buffers input events (i.e. Metavision::EventsFrameGeneratorAlgorithm::process_events()) and generates an image on demand (i.e. Metavision::EventsFrameGeneratorAlgorithm::generate_image_from_events()). After the event image is generated, the counting-related overlays (i.e. lines and counter) are rendered using the Metavision::CountingDrawingHelper class.

As the output images are generated at the same frequency as the Metavision::MonoCountingStatus produced by the Metavision::CountingAlgorithm, the image generation is done in the Metavision::CountingAlgorithm’s output callback:
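The "buffer events, generate an image on demand" pattern can be illustrated with a small standalone class. This is only a sketch of the idea under simplifying assumptions (time-ordered events, a single-channel frame, no accumulation time); `BufferedFrameGenerator`, its methods and the `Event` struct are hypothetical and do not reflect how the SDK class is implemented.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

using timestamp = std::int64_t;

// Hypothetical minimal event type (coordinates and timestamp only).
struct Event {
    int x, y;
    timestamp t;
};

// Illustrative buffer-then-render helper, assuming events arrive in time order.
class BufferedFrameGenerator {
public:
    BufferedFrameGenerator(int width, int height) : width_(width), height_(height) {
        assert(width > 0 && height > 0);
    }

    // Buffer incoming events; nothing is drawn yet.
    void process_events(const Event *begin, const Event *end) {
        buffer_.insert(buffer_.end(), begin, end);
    }

    // Draw every buffered event with t < ts into a fresh frame and drop it.
    // Events at or after ts stay buffered for the next frame.
    std::vector<std::uint8_t> generate(timestamp ts) {
        std::vector<std::uint8_t> frame(static_cast<std::size_t>(width_) * height_, 0);
        while (!buffer_.empty() && buffer_.front().t < ts) {
            const Event &ev = buffer_.front();
            if (ev.x >= 0 && ev.x < width_ && ev.y >= 0 && ev.y < height_)
                frame[static_cast<std::size_t>(ev.y) * width_ + ev.x] = 255;
            buffer_.pop_front();
        }
        return frame;
    }

private:
    int width_, height_;
    std::deque<Event> buffer_;
};
```

Decoupling buffering from rendering in this way lets the event callback stay cheap, while frames are produced only when a consumer (here, a counting result with its timestamp) asks for one.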

    void Pipeline::counting_callback(const std::pair<timestamp, Metavision::MonoCountingStatus> &counting_result) {
        if (!is_processing_)
            return;

        const timestamp &ts            = counting_result.first;
        const timestamp &last_count_ts = counting_result.second.last_count_ts;
        const int count                = counting_result.second.global_counter;

        if (counting_drawing_helper_) {
            events_frame_generation_->generate(ts, back_img_);
            counting_drawing_helper_->draw(ts, count, back_img_);

            if (video_writer_)
                video_writer_->write_frame(ts, back_img_);

            if (window_)
                window_->show(back_img_);
        }

        current_time_callback(ts);
        increment_callback(ts, count);
        inactivity_callback(ts, last_count_ts, count);
    }

while the buffering of the events is done in the Metavision::Camera’s output callback:

    void Pipeline::camera_callback(const Metavision::EventCD *begin, const Metavision::EventCD *end) {
        if (!is_processing_)
            return;

        // Adjust iterators to make sure we only process a given range of timestamps [process_from_, process_to_]
        // Get iterator to the first element greater or equal than process_from_
        begin = std::lower_bound(begin, end, process_from_,
                                 [](const Metavision::EventCD &ev, timestamp ts) { return ev.t < ts; });

        // Get iterator to the first element greater than process_to_
        if (process_to_ >= 0)
            end = std::lower_bound(begin, end, process_to_,
                                   [](const Metavision::EventCD &ev, timestamp ts) { return ev.t <= ts; });

        if (begin == end)
            return;

        /// Apply filters
        bool is_first = true;
        apply_filter_if_enabled(polarity_filter_, begin, end, buffer_filters_, is_first);
        apply_filter_if_enabled(transpose_events_filter_, begin, end, buffer_filters_, is_first);
        apply_filter_if_enabled(activity_noise_filter_, begin, end, buffer_filters_, is_first);

        /// Process filtered events
        apply_algorithm_if_enabled(events_frame_generation_, begin, end, buffer_filters_, is_first);
        apply_algorithm_if_enabled(counting_algo_, begin, end, buffer_filters_, is_first);
    }
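The timestamp cropping at the start of this callback can be tried in isolation. The sketch below reproduces the same idea with a hypothetical `Event` struct and a hypothetical `crop_to_range` helper; it uses `std::upper_bound` for the upper limit, which is equivalent to the `std::lower_bound` call with a `<=` predicate used in the sample and makes the intent a little more explicit. It assumes the events are sorted by timestamp.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <utility>

using timestamp = std::int64_t;

// Hypothetical minimal event type: only the timestamp matters here.
struct Event {
    timestamp t;
};

// Restrict [begin, end) to the events with from <= t <= to.
// A negative `to` means "no upper limit", as in the callback above.
// The input range must be sorted by increasing timestamp.
std::pair<const Event *, const Event *> crop_to_range(const Event *begin, const Event *end,
                                                      timestamp from, timestamp to) {
    assert(std::is_sorted(begin, end, [](const Event &a, const Event &b) { return a.t < b.t; }));

    // First event with t >= from
    begin = std::lower_bound(begin, end, from,
                             [](const Event &ev, timestamp ts) { return ev.t < ts; });

    // First event with t > to
    if (to >= 0)
        end = std::upper_bound(begin, end, to,
                               [](timestamp ts, const Event &ev) { return ts < ev.t; });

    return {begin, end};
}
```

Because both searches are binary searches over a sorted buffer, cropping costs a logarithmic number of comparisons per buffer rather than a pass over every event.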

Note

Different approaches could be considered for more advanced applications.

Note

While filtered events are used by the Metavision::CountingAlgorithm, raw events are used for the display.

Display

Finally, the generated frame is displayed on the screen. The following image shows an example of output: